Introduction

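Nearly everyone knows Netflix, but have you ever watched one of its titles with subtitles? Keeping those subtitles accurate and in sync relies on a mix of automation and carefully annotated data, which makes it an everyday example of data annotation at work.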

What if you could teach your AI model to understand the world? In a world moving rapidly toward automation and intelligence, accurate, annotated data is the secret sauce that powers machine learning.

Whether you are building chatbots that can identify customer emotion or teaching self-driving cars to recognize pedestrians, data annotation is the first step.

In this extensive blog, we’ll cover what data annotation is, its types and significance, a thorough look at the best tools for 2025, and how real businesses are using it to drive the future.

For instance:

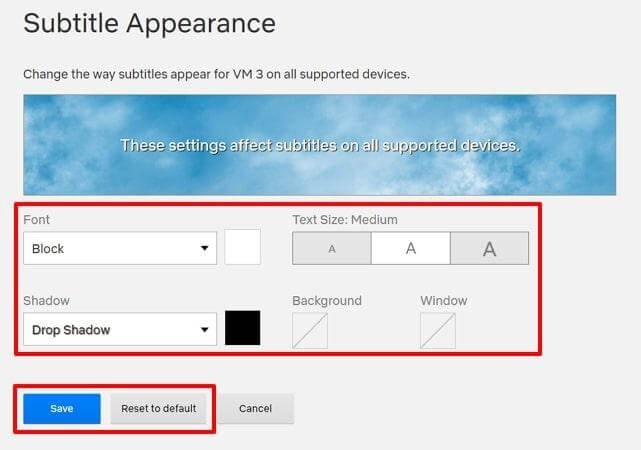

Case Study: Using Audio Annotation to Improve Subtitle Sync on Netflix

Sector: Entertainment & Media

Use Case: Improving Accessibility

Type of Annotation: Text and Audio

Background: Netflix wants all users to be able to access its content, especially those who are hard of hearing. Frame-accurate annotations are necessary to align subtitles and captions with dialogue.

Implementation: Netflix combines automated speech recognition (ASR) with manual audio annotation to make sure that each word, background sound, and speaker change is tagged correctly. Annotations include pauses, emphasis, and non-verbal cues such as [door creaks] and [music fades].

Result: Improved subtitle accuracy led to higher user satisfaction and better accessibility compliance in global markets. The process also enhanced Netflix’s multilingual audio engineering and dubbing workflows.

Source:

https://www.wired.com/story/netflix-subtitles-ai/

https://netflixtechblog.com/

Chapter 1: Understanding What Data Annotation Is

What is Data Annotation?

Data annotation is the process of labelling data, whether text, images, audio, or video, so that computers can understand and interpret it. It is a crucial stage in building machine learning and artificial intelligence models, especially those that use supervised learning.

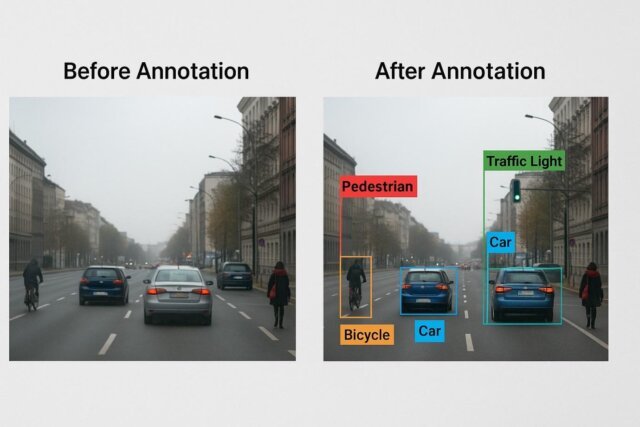

Annotation gives data context and meaning, turning it into structured input that computers can learn from. For instance, annotations could identify vehicles, pedestrians, and traffic lights in a picture of a street scene, and these annotations guide the vision system of a self-driving car.
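To make this concrete, here is a minimal sketch of how an annotated street-scene image might be stored as structured data: an image reference plus a list of labelled bounding boxes. The class names, field names, file name, and the `(x_min, y_min, width, height)` pixel convention are illustrative assumptions (loosely COCO-like), not the schema of any particular tool.

```python
from dataclasses import dataclass, field


@dataclass
class BoxAnnotation:
    """One labelled object: a class name plus a box in pixel coordinates."""
    label: str
    bbox: tuple[float, float, float, float]  # (x_min, y_min, width, height), an assumed convention


@dataclass
class AnnotatedImage:
    """An image file paired with the objects an annotator marked in it."""
    image_path: str
    annotations: list[BoxAnnotation] = field(default_factory=list)

    def labels(self) -> set[str]:
        """Return the distinct object classes present in this image."""
        return {a.label for a in self.annotations}

    def count(self, label: str) -> int:
        """Count how many objects of a given class were annotated."""
        return sum(1 for a in self.annotations if a.label == label)


# Hypothetical street scene: the file name and coordinates are made up for illustration.
scene = AnnotatedImage(
    image_path="street_0001.jpg",
    annotations=[
        BoxAnnotation("car", (34, 120, 180, 95)),
        BoxAnnotation("pedestrian", (260, 98, 40, 110)),
        BoxAnnotation("pedestrian", (310, 102, 38, 105)),
        BoxAnnotation("traffic_light", (402, 10, 18, 46)),
    ],
)

print(sorted(scene.labels()))     # ['car', 'pedestrian', 'traffic_light']
print(scene.count("pedestrian"))  # 2
```

A training pipeline would read many such records; the model never sees the word "pedestrian" in the pixels, only in the labels attached to them.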

Why Does Machine Learning Need Data Annotation?

Machine learning (ML) models learn from examples to identify patterns and make predictions. Without annotated data, a model cannot know what it is meant to learn. It is like trying to learn a language without knowing what any of the words mean: you could not form meaningful connections.

Data annotation makes it possible to generate training datasets, which act as an AI system’s knowledge base. The better the annotation, the more accurate the model’s outputs.

The Function of Annotated Data in Supervised vs. Unsupervised Settings

Annotation forms the foundation of supervised learning. Whether it’s email classification, image object detection, or speech pattern recognition, supervised learning requires high-quality labelled data.

Unsupervised learning, on the other hand, finds patterns or clusters without using labels. Even so, annotated datasets remain useful for evaluating unsupervised models.
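As an illustration of using labels to test an unsupervised model, the sketch below computes cluster purity: for each cluster it counts the items carrying that cluster's most common human-assigned label, sums those counts, and divides by the total number of items. Purity is only one simple option (the adjusted Rand index and normalized mutual information are common alternatives), and the spam/ham data is made up.

```python
from collections import Counter, defaultdict


def cluster_purity(cluster_ids: list[int], true_labels: list[str]) -> float:
    """Fraction of items whose cluster's majority label matches their own annotated label."""
    if len(cluster_ids) != len(true_labels):
        raise ValueError("cluster_ids and true_labels must have the same length")
    if not cluster_ids:
        raise ValueError("need at least one item")
    # Group the annotated labels by the cluster each item was assigned to.
    members: dict[int, list[str]] = defaultdict(list)
    for cid, label in zip(cluster_ids, true_labels):
        members[cid].append(label)
    # For each cluster, keep only the count of its most common annotated label.
    majority_total = sum(Counter(labels).most_common(1)[0][1] for labels in members.values())
    return majority_total / len(cluster_ids)


# Hypothetical output of a clustering model, alongside human annotations for the same items.
clusters = [0, 0, 0, 1, 1, 1, 2, 2]
labels = ["spam", "spam", "ham", "ham", "ham", "ham", "spam", "spam"]
print(cluster_purity(clusters, labels))  # 0.875
```

Here 7 of 8 items sit in a cluster whose majority label matches their own, so purity is 0.875. Note that purity rewards many tiny clusters, which is one reason teams often report it alongside another metric.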

Semi-supervised learning combines a small amount of labelled data with a larger pool of unlabelled data, while reinforcement learning learns from reward signals; in both, human annotations frequently serve as the labels, rewards, or feedback.

Human-in-the-Loop Systems

Despite the rise of automation, people still play a crucial role in data annotation. Human-in-the-loop (HITL) systems combine automation with human judgment: annotators label data, and the system learns from their input.

Over time, models start to help with labelling, and people check or correct their suggestions. This feedback loop improves both accuracy and efficiency. HITL is especially important in sensitive domains like healthcare, where human expertise is needed to ensure high-quality annotations.
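In practice, the loop described above often comes down to a confidence threshold: model pre-labels above the threshold are accepted, and the rest are queued for people to review. The sketch below assumes each prediction carries a confidence score between 0 and 1; the `PreLabel` record, the 0.9 threshold, and the item IDs are illustrative assumptions, not a standard or any tool's API.

```python
from dataclasses import dataclass


@dataclass
class PreLabel:
    item_id: str
    label: str
    confidence: float  # model's score for its predicted label, assumed to lie in [0, 1]


def route_prelabels(preds: list[PreLabel], threshold: float = 0.9) -> tuple[list[PreLabel], list[PreLabel]]:
    """Split model pre-labels into auto-accepted ones and ones queued for human review."""
    accepted = [p for p in preds if p.confidence >= threshold]
    needs_review = [p for p in preds if p.confidence < threshold]
    # Show reviewers the least certain items first, where their judgment matters most.
    needs_review.sort(key=lambda p: p.confidence)
    return accepted, needs_review


# Hypothetical pre-labels on medical images.
preds = [
    PreLabel("img_01", "tumour", 0.97),
    PreLabel("img_02", "healthy", 0.62),
    PreLabel("img_03", "tumour", 0.91),
    PreLabel("img_04", "healthy", 0.40),
]
accepted, review = route_prelabels(preds)
print([p.item_id for p in accepted])  # ['img_01', 'img_03']
print([p.item_id for p in review])    # ['img_04', 'img_02']
```

In a sensitive domain, a team might raise the threshold or route every item to a reviewer; the corrected labels then become new training data for the next model iteration.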

The Data Annotation Workflow Explained

Data Collection: A variety of sources, including social media, cameras, microphones, and sensors, are used to collect raw data.

Pre-processing: Data is cleaned, formatted, and prepared for annotation.

Annotation: Tools or human annotators assign labels to the data according to task-specific specifications.

Quality Control: Labels are evaluated using consensus models, spot checks, or automated metrics.

Model Training: Machine learning models are trained using the annotated data.

Feedback Loop: Model predictions are evaluated, and additional data may be annotated to improve performance.
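The quality-control step in this workflow often starts with a simple consensus model: several annotators label the same item, the majority label wins, and items without a clear majority are escalated to an expert. The sketch below is a minimal version of that idea; the `min_agreement` parameter, the function names, and the sample votes are illustrative assumptions.

```python
from collections import Counter
from typing import Optional


def consensus_label(votes: list[str], min_agreement: float = 0.5) -> Optional[str]:
    """Return the majority label if its share of votes exceeds min_agreement, else None."""
    if not votes:
        return None
    label, count = Counter(votes).most_common(1)[0]
    return label if count / len(votes) > min_agreement else None


def aggregate(votes_by_item: dict[str, list[str]]) -> tuple[dict[str, str], list[str]]:
    """Resolve each item to a consensus label and collect items that need adjudication."""
    resolved: dict[str, str] = {}
    escalate: list[str] = []
    for item_id, votes in votes_by_item.items():
        label = consensus_label(votes)
        if label is None:
            escalate.append(item_id)  # no clear majority: send to an expert reviewer
        else:
            resolved[item_id] = label
    return resolved, escalate


# Hypothetical sentiment votes from three annotators per review.
votes = {
    "review_1": ["positive", "positive", "neutral"],
    "review_2": ["negative", "neutral", "positive"],
    "review_3": ["negative", "negative", "negative"],
}
resolved, escalate = aggregate(votes)
print(resolved)  # {'review_1': 'positive', 'review_3': 'negative'}
print(escalate)  # ['review_2']
```

Plain majority voting treats every annotator equally; weighting annotators by their past accuracy on gold-standard items is a common refinement.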

Metrics for Accuracy and Quality Control

Annotation quality is crucial: poorly labelled data leads to inaccurate predictions and defective models. Several practices help ensure quality:

Inter-Annotator Agreement: Measures how consistently different annotators label the same data.

Gold Standard Datasets: Annotator output is checked against known, reliable reference labels.

Review Cycles: Labels are audited and corrected by supervisors or knowledgeable annotators.

Automated Validation: Tools check for outliers, discrepancies, or missing labels.
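Inter-annotator agreement for two annotators is commonly reported as Cohen's kappa, which corrects raw agreement for the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e the chance agreement implied by each annotator's label frequencies. The function below is a plain-Python sketch of that formula with made-up labels; scikit-learn's `sklearn.metrics.cohen_kappa_score` computes the same statistic.

```python
from collections import Counter


def cohen_kappa(a: list[str], b: list[str]) -> float:
    """Cohen's kappa for two annotators who labelled the same items in the same order."""
    if len(a) != len(b) or not a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(a)
    # Observed agreement: fraction of items where both annotators chose the same label.
    p_o = sum(x == y for x, y in zip(a, b)) / n
    # Chance agreement: probability both pick the same label if each labels at random
    # according to their own label frequencies.
    freq_a, freq_b = Counter(a), Counter(b)
    p_e = sum(freq_a[k] * freq_b[k] for k in freq_a.keys() | freq_b.keys()) / (n * n)
    if p_e == 1.0:
        # Only possible when both used one identical label everywhere; kappa is undefined,
        # so this sketch treats it as perfect agreement (some libraries return NaN instead).
        return 1.0
    return (p_o - p_e) / (1 - p_e)


ann_1 = ["pos", "pos", "neg", "neg", "pos", "neg"]
ann_2 = ["pos", "neg", "neg", "neg", "pos", "neg"]
print(round(cohen_kappa(ann_1, ann_2), 3))  # 0.667
```

The annotators agree on 5 of 6 items (p_o ≈ 0.833), but with these label frequencies they would agree half the time by chance (p_e = 0.5), so kappa is about 0.667 rather than 0.833.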

Annotation quality is frequently assessed using metrics such as precision, recall, and F1-score, particularly on test datasets.
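For a single class, these metrics compare a set of labels (an annotator's or a model's) against a gold-standard reference: precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 is their harmonic mean. The sketch below computes them for one positive class; the "fraud"/"ok" labels are illustrative. For multi-class work, scikit-learn's `precision_recall_fscore_support` covers the same ground.

```python
def precision_recall_f1(predicted: list[str], gold: list[str], positive: str) -> tuple[float, float, float]:
    """Precision, recall, and F1 for one class, comparing labels against a gold standard."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must be the same length")
    pairs = list(zip(predicted, gold))
    tp = sum(p == positive and g == positive for p, g in pairs)  # correctly flagged
    fp = sum(p == positive and g != positive for p, g in pairs)  # flagged but wrong
    fn = sum(p != positive and g == positive for p, g in pairs)  # missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


gold = ["fraud", "ok", "fraud", "ok", "fraud", "ok"]
annotated = ["fraud", "fraud", "ok", "ok", "fraud", "ok"]
p, r, f = precision_recall_f1(annotated, gold, positive="fraud")
print(round(p, 3), round(r, 3), round(f, 3))  # 0.667 0.667 0.667
```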

Common Obstacles in Annotation

Subjectivity: Annotators may disagree, particularly on tasks like intent classification or sentiment analysis.

Scale: It takes a lot of time to annotate millions of data points.

Cost: High-quality annotation can be expensive.

Privacy and Compliance: Particularly when handling biometric or medical data.

Data Drift: Re-annotation is necessary due to changes in data distribution over time.

Annotator Types

Crowdsourced Annotators: Workers from all around the world can annotate data using platforms like Amazon Mechanical Turk.

In-house Teams: Businesses use specialized teams that have received domain-specific training.

Outsourced Providers: Specialist companies offer annotation as a service, often with the ability to scale quickly.

Project size, budget, and necessary accuracy all play a role in selecting the best type of annotator. For difficult projects requiring domain knowledge, in-house teams perform well, whereas crowdsourcing is best suited for simpler but higher volume tasks.

Real-World Examples Across Industries

Healthcare: Annotated medical images, such as radiographs and retinal scans, help detect tumours and diabetic retinopathy.

Finance: Fraud detection is made possible by labelled transactions.

Retail: Labelled purchase records are the foundation of product recommendation algorithms.

Autonomous Vehicles: Annotated street scenes help cars navigate and identify objects.

Customer service: Chatbots comprehend questions and provide relevant answers by using labelled dialogues.

Annotated data also matters for large language models (LLMs) such as GPT and BERT. Although their pre-training is largely self-supervised, the fine-tuning stages for tasks like classification, translation, and summarization rely on labelled examples.

Chapter 2: Data Annotation Types and Their Applications

There is no one-size-fits-all method for data annotation. The nature of your data and the objectives of your machine learning model will determine the kind of annotation that is required.

The main categories of data annotation are shown below, together with detailed use cases, techniques, challenges, and the tools needed to implement them.

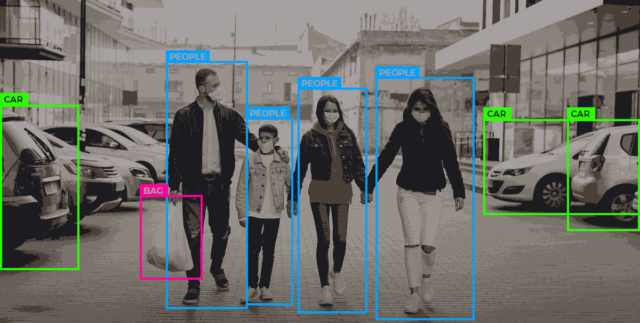

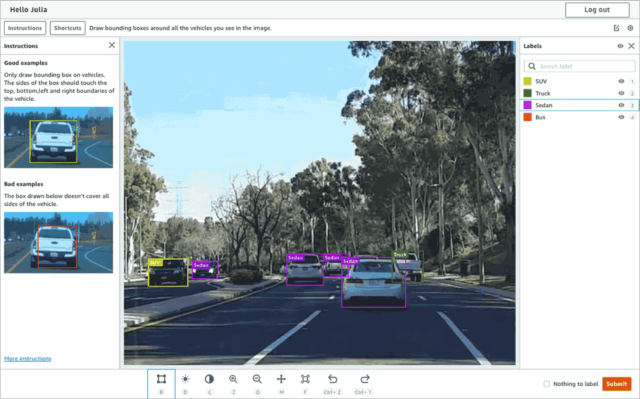

1. Annotation of Images

What It Is:

Image annotation involves labelling images to train computer vision models. Labels include bounding boxes, segmentation masks, and classification tags. Depending on the task’s complexity, annotations may capture fine details, such as the shape of an organ or the outline of a pedestrian.

Methods:

Bounding Boxes: Rectangles drawn around objects to locate and categorize them.

Polygon Annotation: Irregular shapes are outlined with polygons; this is frequently used in satellite or medical images.

Semantic Segmentation: Gives every pixel a class.

Instance Segmentation: Differentiating between objects of the same class.

Key Point Annotation: Labelling particular components of an object, such as joints in pose estimation.

Examples of Use:

- Object detection (cars, pedestrians, lanes) in autonomous vehicles.

- Medical imaging: organ labelling, tumour segmentation.

- Retail and e-commerce: virtual try-ons and product recognition.

- Agriculture: identifying crop diseases from satellite imagery.

- Manufacturing: identifying defects on production lines.

Challenges:

Precise outlining is labour-intensive.

Complex images show high inter-annotator variability.

Requires subject-matter experts, such as radiologists for X-rays.
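One common way to quantify inter-annotator variability for bounding boxes is intersection over union (IoU): the area where two boxes overlap divided by the total area they cover together. The sketch below assumes boxes given as `(x_min, y_min, x_max, y_max)` in pixels; the threshold at which a team counts two boxes as "in agreement" (0.5 is a frequent choice in object-detection benchmarks) is a project decision, and the coordinates are made up.

```python
def iou(box_a: tuple[float, float, float, float], box_b: tuple[float, float, float, float]) -> float:
    """Intersection over union of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the overlapping rectangle; width or height goes negative if there is no overlap.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Two annotators outlining the same hypothetical pedestrian.
annotator_1 = (100, 50, 140, 150)
annotator_2 = (110, 55, 150, 150)
print(round(iou(annotator_1, annotator_2), 3))  # 0.576
```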

Tools:

- Labelbox

- Computer Vision Annotation Tool, or CVAT

- V7 Darwin

- SuperAnnotate

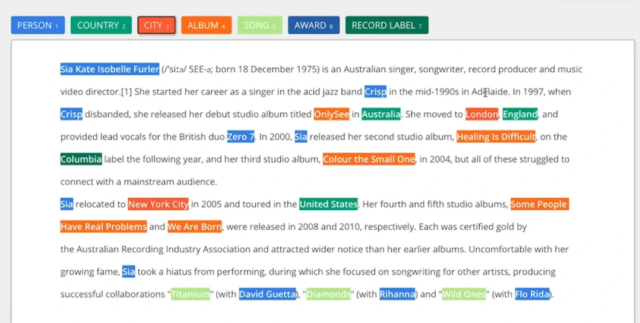

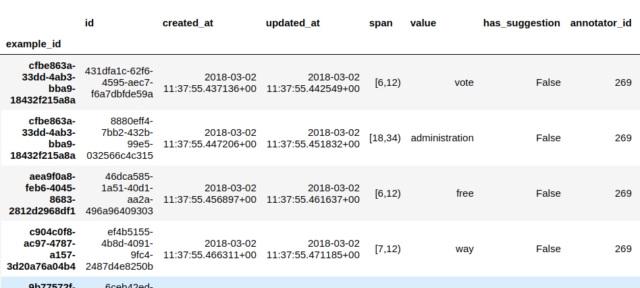

2. Annotation of Text

What It Is:

Text annotation involves tagging individual words, sentences, or entire documents to train NLP models. Labels help the algorithm understand entities, intent, syntax, and semantics.

Methods:

Named Entity Recognition (NER): Identifying names of people, locations, and organizations.

Sentiment Annotation: Classifying text as positive, negative, or neutral.

Part-of-Speech Tagging: Labelling verbs, nouns, adjectives, and other word classes.

Intent Annotation: Categorizing user queries by intent; widely used in chatbots.

Coreference Resolution: Linking pronouns to the nouns they refer to.
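NER annotations are often stored as labelled spans and then converted into per-token tags for training. The sketch below converts token-index spans into the widely used BIO scheme (B- marks the first token of an entity, I- a continuation, O everything else). Whitespace tokenization, the `(start, end, label)` span format with an exclusive end, and the sample sentence are simplifying assumptions.

```python
def spans_to_bio(tokens: list[str], spans: list[tuple[int, int, str]]) -> list[str]:
    """Convert entity spans over token indices [start, end) into BIO tags."""
    tags = ["O"] * len(tokens)
    for start, end, label in spans:
        if not (0 <= start < end <= len(tokens)):
            raise ValueError(f"invalid span {(start, end, label)}")
        if any(t != "O" for t in tags[start:end]):
            raise ValueError(f"span {(start, end, label)} overlaps another entity")
        tags[start] = f"B-{label}"          # first token of the entity
        for i in range(start + 1, end):
            tags[i] = f"I-{label}"          # continuation tokens
    return tags


tokens = "Maria Lopez joined Acme Corp in New York".split()
spans = [(0, 2, "PER"), (3, 5, "ORG"), (6, 8, "LOC")]
for token, tag in zip(tokens, spans_to_bio(tokens, spans)):
    print(f"{token}\t{tag}")
```

Real tokenizers split text differently (punctuation, subwords), so production pipelines usually store character offsets and align them to tokens at training time.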

Examples of Use:

- Virtual assistants and chatbots: Recognizing user intent.

- Healthcare: Tagging symptoms and prescriptions in doctors’ notes.

- Finance: Using sentiment and keywords to flag risky transactions.

- Legal Tech: Annotating and classifying clauses in legal documents.

Challenges:

Subjective interpretation (particularly of sentiment).

Context-dependent ambiguity.

Complexity of handling multiple languages.

Tools:

- Prodigy

- Doccano

- LightTag

- MonkeyLearn

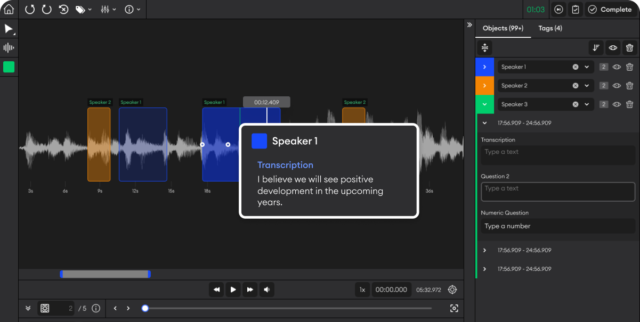

3. Annotation of Audio

What It Is:

Audio annotation labels sound data. It includes speech transcription, background-noise tagging, and categorizing speakers by identity and mood.

Methods:

Speech Transcription: Transcribing speech to text.

Speaker Diarisation: Identifying which speaker is talking at each point in the recording.

Emotion Detection: Labelling the tone and affect of speech.

Noise Tagging: Labelling non-speech audio, such as alarms, music, or background sounds.
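Time-stamped audio annotations like these are often exported in a subtitle-style format, similar in spirit to the subtitle example in the introduction (this is not Netflix's actual pipeline). The sketch below turns hypothetical annotated segments (start and end in seconds, a speaker label from diarisation, and the transcription or a non-speech tag) into SubRip (SRT) cues, whose timestamps use the `HH:MM:SS,mmm` format. The `Segment` structure and sample dialogue are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    start: float   # seconds
    end: float     # seconds
    speaker: str   # diarisation label, or "" for non-speech events
    text: str      # transcription, or a non-speech tag such as "[door creaks]"


def srt_time(seconds: float) -> str:
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments: list[Segment]) -> str:
    """Render annotated segments as numbered SRT cues, ordered by start time."""
    cues = []
    for i, seg in enumerate(sorted(segments, key=lambda s: s.start), start=1):
        line = f"{seg.speaker}: {seg.text}" if seg.speaker else seg.text
        cues.append(f"{i}\n{srt_time(seg.start)} --> {srt_time(seg.end)}\n{line}\n")
    return "\n".join(cues)  # a blank line separates consecutive cues


segments = [
    Segment(0.0, 2.4, "ANNA", "Did you hear that?"),
    Segment(2.5, 3.6, "", "[door creaks]"),
    Segment(3.8, 5.25, "BEN", "It's just the wind."),
]
print(to_srt(segments))
```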

Examples of Use:

- Alexa and Siri: Voice assistants that can understand commands.

- Call centres: Quality control and sentiment analysis.

- Podcasts: Transcripts produced automatically.

- Security: Gunshot or alarm detection by surveillance systems.

Challenges:

Dialects and accents.

Background sounds.

Requirements for real-time processing.

Tools:

- Audacity (manual annotation)

- Amazon Transcribe

- DeepSpeech and custom toolkits

- Sonix.ai

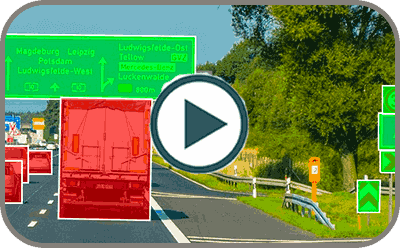

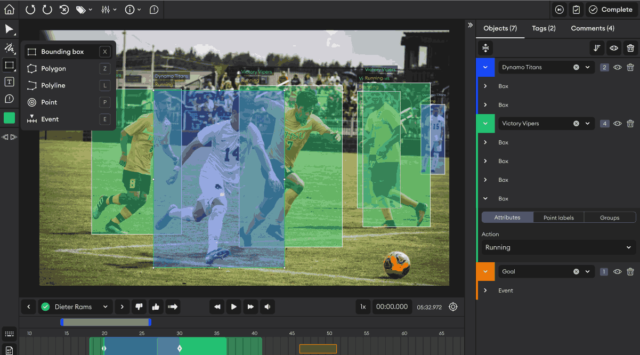

4. Annotation of Video

What It Is:

Annotating videos entails labelling frame sequences. It encompasses behaviour prediction, activity detection, and object tracking.

Methods:

Object Tracking: Tracking an object between frames.

Event Annotation: Labelling events or activities (such as a person falling).

Frame-by-Frame Annotation: Labelling individual frames, each tied to a timestamp.
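Video annotation tools commonly reduce object-tracking effort by letting annotators draw boxes only on keyframes and interpolating the frames in between (CVAT's track mode works this way, for example). The sketch below shows plain linear interpolation, assuming `(x_min, y_min, x_max, y_max)` boxes keyed by frame number; real tools may add smarter tracking, and the coordinates are made up.

```python
Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), an assumed convention


def interpolate_track(keyframes: dict[int, Box]) -> dict[int, Box]:
    """Fill in a box for every frame between hand-annotated keyframes by linear interpolation."""
    if not keyframes:
        return {}
    frames = sorted(keyframes)
    track: dict[int, Box] = {}
    for f0, f1 in zip(frames, frames[1:]):
        b0, b1 = keyframes[f0], keyframes[f1]
        for f in range(f0, f1):
            t = (f - f0) / (f1 - f0)  # 0.0 at the earlier keyframe, approaching 1.0 at the later one
            track[f] = tuple(a + t * (b - a) for a, b in zip(b0, b1))
    # The loop stops just before the last keyframe, so copy it over unchanged.
    track[frames[-1]] = keyframes[frames[-1]]
    return track


# A hypothetical car annotated by hand on frames 0 and 4 only.
keys = {0: (10.0, 20.0, 50.0, 60.0), 4: (30.0, 20.0, 70.0, 60.0)}
track = interpolate_track(keys)
print(track[2])  # (20.0, 20.0, 60.0, 60.0)
```

Linear interpolation works well for smooth motion; when an object turns or is occluded, annotators add extra keyframes to correct the track.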

Examples of Use:

- Security and surveillance: Detecting intrusions.

- Autonomous vehicles: Detecting lane changes.

- Sports analytics: Monitoring of players.

- Retail: Monitoring consumer behavior in-store.

Challenges:

Processing and storing massive video files.

Speed of real-time annotation.

High level of annotation fatigue.

Tools:

- VGG Image Annotator, or VIA

- CVAT (also for video)

- MakeSense.ai

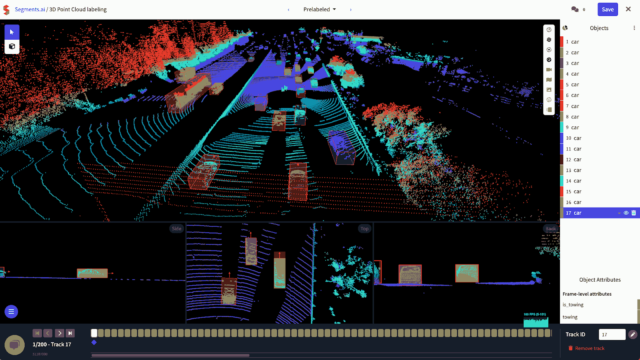

5. Annotation of 3D Point Clouds

What It Is:

This involves labelling spatial data from LiDAR sensors and is used mainly in robotics and autonomous vehicles.

Methods:

Cuboids: 3D bounding boxes.

Segmentation: Giving each point a classification.

Tracking: Keeping an eye on an object’s motion in space.
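A cuboid label is typically stored as a center, dimensions, and a heading (yaw) angle around the vertical axis. The sketch below assumes that representation and a z-up coordinate frame, and checks which LiDAR points fall inside a cuboid, which is one way cuboid labels can be turned into per-point segmentation labels. Axis and yaw conventions differ between datasets, so the `Cuboid` fields and sample values are illustrative assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Cuboid:
    """A 3D box label: center, size along its own axes, and heading about the vertical axis."""
    cx: float
    cy: float
    cz: float
    length: float  # extent along the box's local x-axis
    width: float   # extent along the box's local y-axis
    height: float  # extent along the vertical z-axis
    yaw: float     # rotation about z, in radians (assumed convention)
    label: str

    def contains(self, x: float, y: float, z: float) -> bool:
        """True if the point lies inside the box (boundary included)."""
        # Shift the point so the box center is the origin.
        dx, dy, dz = x - self.cx, y - self.cy, z - self.cz
        # Rotate by -yaw so the box's axes line up with the coordinate axes.
        c, s = math.cos(-self.yaw), math.sin(-self.yaw)
        local_x = dx * c - dy * s
        local_y = dx * s + dy * c
        return (abs(local_x) <= self.length / 2
                and abs(local_y) <= self.width / 2
                and abs(dz) <= self.height / 2)


def label_points(points, cuboids, background="unlabelled"):
    """Give each point the label of the first cuboid that contains it, else `background`."""
    return [next((c.label for c in cuboids if c.contains(*p)), background) for p in points]


# Hypothetical car rotated 90 degrees, so its 4.5 m length runs along the y-axis.
car = Cuboid(5.0, 0.0, 0.8, length=4.5, width=1.8, height=1.6, yaw=math.pi / 2, label="car")
points = [(5.0, 2.0, 0.8), (7.0, 0.0, 0.8), (5.0, 0.0, 2.0)]
print(label_points(points, [car]))  # ['car', 'unlabelled', 'unlabelled']
```

Without the yaw rotation, the second point would wrongly land inside the box, which is why heading must be annotated along with position and size.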

Examples of Use:

- Autonomous vehicles: 3D object recognition.

- Robotics: Mapping indoor spaces.

- AR/VR: Object mapping and room structure.

Challenges:

Requires specialized knowledge.

High memory (RAM) usage.

Tools must be able to handle 3D rendering.

Tools:

- Scale AI

- Labelbox (3D)

- SuperAnnotate (LiDAR)

- CloudCompare

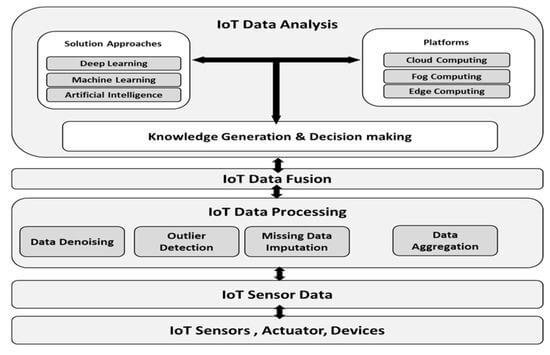

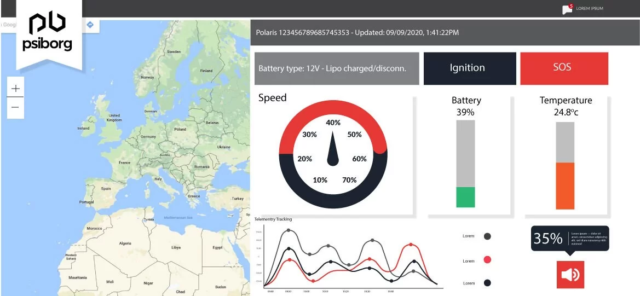

6. Annotation of Sensor and IoT Data

What It Is:

This entails annotating time-series data from sensors and is utilized in smart homes, factories, and medical wearables.

Methods:

Event Tagging: Finding important occurrences, such as a machine breakdown.

Pattern Recognition: Annotating recurrent signal patterns.

Multi-Sensor Correlation: Identifying interdependent signals.
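Event tagging on sensor streams is often bootstrapped with simple rules that propose candidate events for a human to confirm. The sketch below flags contiguous runs where a reading exceeds a threshold and returns them as labelled index intervals; the vibration readings, the 1.0 threshold, the `min_length` rule, and the `Event` record are made-up assumptions, not a recommended detector.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Event:
    start_idx: int
    end_idx: int  # inclusive
    label: str


def tag_threshold_events(values: list[float], threshold: float, label: str, min_length: int = 1) -> list[Event]:
    """Tag contiguous runs where values exceed threshold and last at least min_length samples."""
    events: list[Event] = []
    run_start: Optional[int] = None
    for i, v in enumerate(values):
        if v > threshold and run_start is None:
            run_start = i  # a run above the threshold begins
        elif v <= threshold and run_start is not None:
            if i - run_start >= min_length:
                events.append(Event(run_start, i - 1, label))
            run_start = None  # the run has ended
    # Close a run that is still open at the end of the series.
    if run_start is not None and len(values) - run_start >= min_length:
        events.append(Event(run_start, len(values) - 1, label))
    return events


vibration = [0.2, 0.3, 1.4, 1.6, 1.5, 0.4, 0.3, 1.2, 0.2, 1.8, 1.9]
events = tag_threshold_events(vibration, threshold=1.0, label="abnormal_vibration", min_length=2)
print([(e.start_idx, e.end_idx) for e in events])  # [(2, 4), (9, 10)]
```

The single spike at index 7 is dropped by `min_length=2`; whether such spikes matter is exactly the kind of judgment a human annotator then confirms or corrects.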

Examples of Use:

- Smart manufacturing: Predictive maintenance.

- Healthcare: Monitoring vital signs.

- Wearable technology: Tracking activity.

Challenges:

Temporal complexity.

Integration of multivariate signals.

Poor data quality.

Tools:

- Edge Impulse

- Matplotlib + custom scripts

- IoT analytics platforms

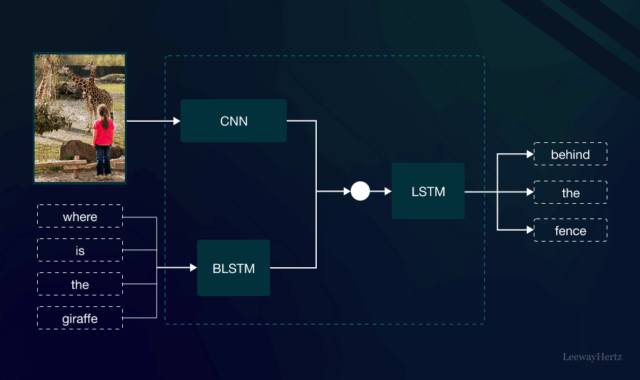

7. Multi-Modal Annotation

Multi-modal annotation combines multiple data sources, such as text, audio, and video, into a single labelled dataset.

For instance:

Conversational AI: Video + Audio + Text (speech, tone, and facial expressions).

Healthcare: Integrating textual doctor’s notes with X-ray pictures.

Examples of Use:

- Intelligent Surveillance Systems: Examining audio and video streams.

- Autonomous Retail: Integrating transaction records, product photos, and shelf camera footage.

New Types of Annotation in 2025

Newer forms of annotation are appearing as AI systems get more complex:

Emotional Context Annotation: Goes beyond basic sentiment to label subtle emotions, irony, and sarcasm.

Synthetic Data Annotation: Labelling synthetic data to augment real-world datasets.

Real-Time Annotation: Labelling data as it is collected, often on edge devices.

Selecting the Proper Type of Annotation

Selecting the appropriate form of annotation is dependent upon:

Data Modalities: Text, image, video, or audio.

Task Objective: Classification, detection, segmentation, or creation.

Model Architecture: Various models (such as CNNs, RNNs, and Transformers) could need various kinds of input data.

Comparison Table

| Data Type | Annotation Type | Example Use Case |
|---|---|---|
| Text | Sentiment, NER | Chatbots, Reviews |
| Image | Bounding Box, Polygon | Self-Driving Cars |
| Video | Object Tracking, Event Tagging | Surveillance |
| Audio | Emotion, Transcription | Voice Assistants |
| 3D Point Cloud | Cuboid, Segmentation | LiDAR Cars |
| Multi-Modal | Combined Labels | Conversational AI |

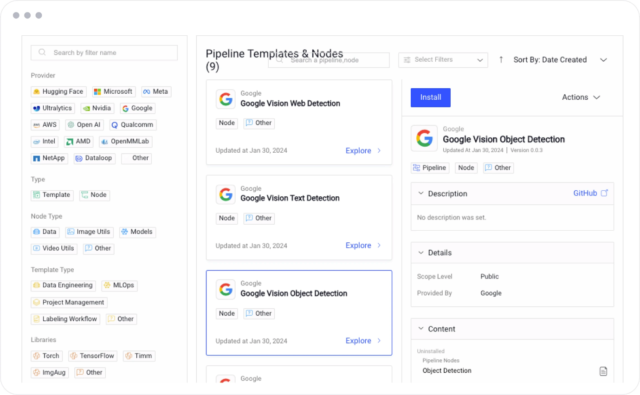

Chapter 3: Tool Guide 2025: The Best Platforms and Tools for Data Annotation

The number of platforms and technologies supporting the annotation process is growing along with demand for high-quality annotated datasets. In 2025, data annotation systems are expected to offer scalability, automation, security, support for a variety of annotation types, and seamless integration with machine learning pipelines. Below is a detailed look at some of the most cutting-edge, popular, and enterprise-grade data annotation tools for 2025.

- Labelbox

Overview: Labelbox has developed into a complete AI data engine. It allows businesses to centrally label, monitor, and refine training data.

Important attributes:

- Text, audio, video, and image annotation

- Strong automation through ML-assisted labelling

- Model-assisted labelling with integrated active learning

- SDKs and an API-first architecture

- Workforce management, quality assurance tools, and real-time collaboration

Use Case: Autonomous vehicle manufacturers and the defense industry use Labelbox extensively for large-scale image and video annotation projects.

Pricing: Custom enterprise pricing; a free trial is available.

Website: https://labelbox.com

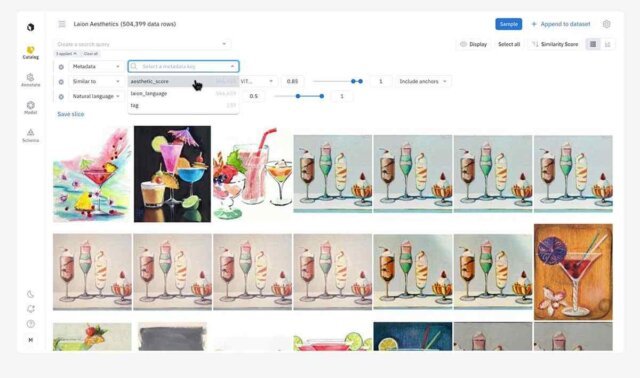

- Scale AI

Overview: A high-end data annotation platform renowned for its strong security measures, scalability, and quality.

Important attributes:

- Annotation pipelines that involve humans in the loop

- Supports text, video, satellite photos, and 3D LiDAR

- Quick turnaround with integrated quality assurance processes

- Integration with AI/ML models to support active learning

Use Case: Used by the U.S. Department of Defense, Lyft, and OpenAI. Particularly strong for LiDAR annotation in autonomous driving and geospatial analytics.

Pricing: Enterprise-focused premium pricing strategy.

Website: https://scale.com

- SuperAnnotate

Overview: SuperAnnotate is well-known for its visual-first platform, which emphasizes teamwork and quick annotation workflows.

Important attributes:

- Supports all the main annotation types, including bounding box, polygon, and key point

- Integration of AI models for predictive labelling

- Features for job routing and quality control

- Compatible with Pascal VOC, YOLO, and COCO formats

Use Case: Applied to robotics, medical imaging, and agricultural drones.

Pricing: Offers a free starter tier and team plans.

Website: https://superannotate.com
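Because tools like SuperAnnotate import and export several box formats, conversions between them come up often. The sketch below converts a COCO-style box (`[x_min, y_min, width, height]` in pixels) into the YOLO convention (box center and size divided by the image width and height) and into Pascal VOC corners (`[x_min, y_min, x_max, y_max]` in pixels). It is a format sketch only and does not use any SuperAnnotate API; the box and image size are made up.

```python
def coco_to_yolo(bbox: list[float], img_w: int, img_h: int) -> tuple[float, float, float, float]:
    """COCO [x_min, y_min, w, h] in pixels -> YOLO (x_center, y_center, w, h) normalized to [0, 1]."""
    x, y, w, h = bbox
    return ((x + w / 2) / img_w, (y + h / 2) / img_h, w / img_w, h / img_h)


def coco_to_voc(bbox: list[float]) -> tuple[float, float, float, float]:
    """COCO [x_min, y_min, w, h] -> Pascal VOC (x_min, y_min, x_max, y_max), all in pixels."""
    x, y, w, h = bbox
    return (x, y, x + w, y + h)


box = [100, 50, 200, 100]  # hypothetical object in a 640x480 image
print([round(v, 4) for v in coco_to_yolo(box, 640, 480)])  # [0.3125, 0.2083, 0.3125, 0.2083]
print(coco_to_voc(box))                                    # (100, 50, 300, 150)
```

In a YOLO label file, each line also starts with an integer class index before these four numbers.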

- Amazon SageMaker Ground Truth

Overview: AWS’s fully managed data labelling service for creating highly accurate training datasets.

Important attributes:

- Integration with the Amazon Mechanical Turk workforce

- Automated labelling for text and image data

- Integration with other AWS services (Rekognition, EC2, and S3)

- Lower labelling costs through active-learning-based automation

Use Case: Ideal for businesses already in the AWS ecosystem; widely used in the financial technology, cloud infrastructure, and eCommerce industries.

Pricing: Pay as you go according to the volume and type of data.

Website: https://aws.amazon.com/sagemaker/groundtruth

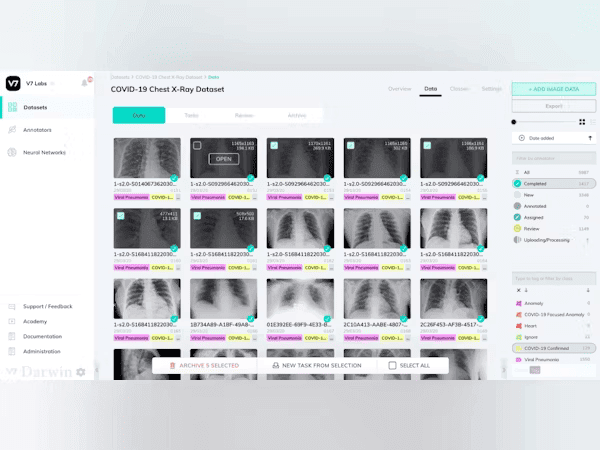

- V7 Darwin

Overview: With automated workflows and regulatory compliance, V7 Darwin is made especially for medical annotation and the life sciences.

Important attributes:

- GDPR-compliant and FDA-approved

- Supports the annotation of CT scans, radiography, and microscopy

- Deep learning-based automated label recommendations

- Human-in-the-loop model training and intelligent workflows

Use Case: Hospitals and biotech companies annotate MRI and pathology scans to help diagnose rare disorders.

Pricing: Enterprise pricing; free academic access is available.

Website: https://www.v7labs.com

- Dataloop

Overview: An end-to-end cloud-based platform that facilitates automated pipelines, data management, and data labeling.

Important attributes:

- Video, text, image, audio, and 3D point cloud annotation

- Integrated data governance and MLOps tools

- Dashboards with advanced analytics for label QA

Use Case: Applications include smart city surveillance, retail, and agriculture.

Pricing: Free trial available; premium tiers are based on dataset size.

Website: https://dataloop.ai

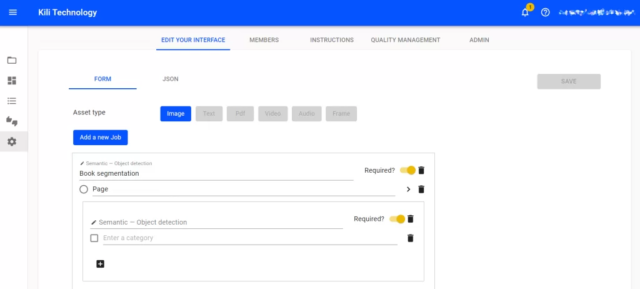

- Kili Technology

Overview: Kili’s collaborative platform prioritizes smooth labeling processes, model feedback loops, and quick iterations.

Important attributes:

- Support for several data types, such as OCR and PDF annotation

- Annotator and label performance monitoring

- AI-driven quality assessment

- Support for CLI and API

Use Case: Document digitization, insurance claims, and financial services (invoice annotation).

Pricing: Custom pricing; a trial version is available.

Website: https://kili-technology.com

- Appen (formerly Figure Eight)

Overview: With a global workforce and enterprise AI integration, Appen provides one of the biggest platforms for human-annotated data.

Important attributes:

- Supports speech, CV, NLP, and multi-modal annotation

- Offers pre-labelled datasets

- AI-assisted labelling and humans in the loop

- Excellent security features and scalability

Use Case: Voice AI training, social media, and advertising technology.

Pricing: Tailored plans according to complexity and volume.

Website: https://appen.com

- LightTag (for annotation of text)

Overview: LightTag is an easy-to-use platform designed for enterprise NLP teams working on text-based applications.

Important attributes:

- NER and emotion tagging with support for multiple users

- Integration of models and active learning

- Workflows for QA and confidence scoring

Use Case: Customer service, legal technology, and social listening initiatives.

Pricing: Upon request.

Website: https://lighttag.io

Comparison Table

| Tool Name | Supported Data Types | Automation | Key Industries |
|---|---|---|---|
| Labelbox | Image, Video, Text, Audio | Yes | Defense, Retail |
| Scale AI | 3D, Text, Satellite | Yes | Autonomous Driving, Government |
| SuperAnnotate | Image, Video | Yes | Medical, Robotics |
| SageMaker Ground Truth | Image, Text | Yes | eCommerce, Cloud |
| V7 Darwin | Medical, Bio | Yes | Healthcare |
| Dataloop | 3D, Video, Audio | Yes | Retail, Agriculture |
| Kili Technology | PDF, OCR | Yes | Finance, Insurance |
| Appen | Multi-Modal | Yes | Voice AI, Social Media |
| LightTag | Text only | Yes | NLP, Legal |

Important Trends in the Tool Ecosystem for 2025

AI-Assisted Annotation Is Commonplace: Most leading tools now offer active learning and predictive labelling.

Multi-Modality Support: Platforms are increasingly expected to handle at least three data types.

Privacy and Regulation Compliance: Compliance with privacy laws and industry regulations is especially important in government, healthcare, and finance.

Custom Workflow Automation: For end-to-end ML pipeline integration, clients want technologies with robust API compatibility.

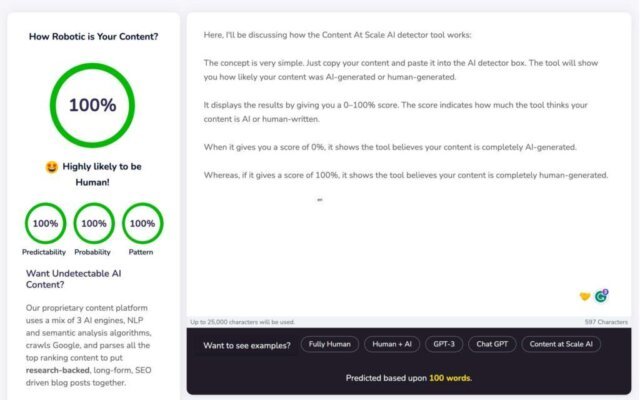

Best Practices in Data Annotation

Clear Guidelines: Give annotators thorough guidelines to ensure consistency.

Training: Inform annotators of the task’s particular requirements.

Quality Checks: To find and fix mistakes, conduct routine reviews.

Ethical Considerations

Privacy: Verify that procedures for data annotation adhere to privacy laws.

Mitigating Biases: Recognize any biases in data and work to reduce them.

The Future of AI-Assisted and Automated Data Annotation

Automation and AI Assistance

AI advancements are making annotation increasingly automated, reducing the need for manual labelling and accelerating AI model development.

Participation of the Community and Crowdsourcing

Crowdsourcing is being used more and more by platforms to annotate massive datasets, bringing a worldwide community into the AI development process.

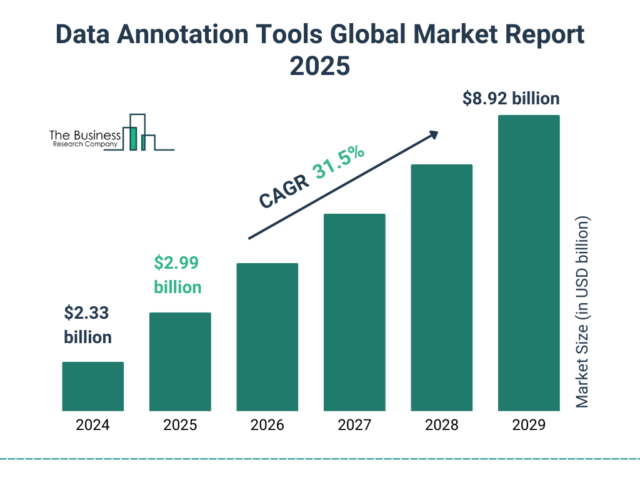

The Future: There Will Continue to Be Demand

As AI becomes more pervasive in society, businesses will keep pushing for faster, more contextual, and more accurate training data pipelines. In response, expect to see:

Increased spending on cloud-based annotation systems

Growing use of real-world annotations combined with generated data

More stringent guidelines for ethical labelling and data privacy compliance

Multilingual and cultural annotations are becoming more and more necessary to support worldwide AI deployments.

This growth is qualitative as well as quantitative. In addition to more data, businesses will require better, cleaner, and more sophisticated data that is suited to particular AI use cases.

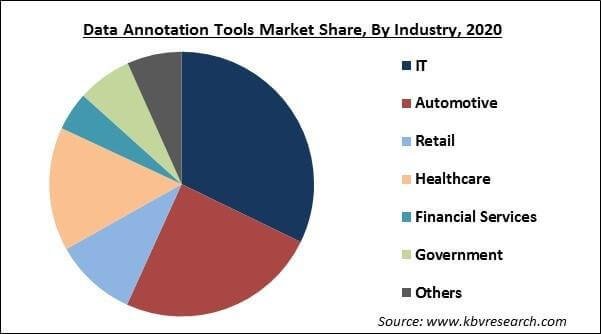

Global Data Annotation Tools Market Outlook

| Year | Global Market Size (USD) | CAGR |
|---|---|---|
| 2023 | $1.59 Billion | – |
| 2025 | $3.8 Billion | 34.2% |
| 2030 | $13.5 Billion | – |

Source:

https://www.fortunebusinessinsights.com/data-annotation-tools-market-106777
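The CAGR column uses the standard compound annual growth rate formula: CAGR = (end value / start value)^(1 / years) - 1. The table does not say which period its 34.2% figure covers, so the small helper below is only a way to check a quoted rate against whichever start value, end value, and number of years you choose; the example uses round illustrative numbers rather than the table's figures.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two positive values measured `years` apart."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Illustrative round numbers: a market that doubles over 5 years.
print(f"{cagr(1.0, 2.0, 5):.1%}")  # 14.9%
```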

The Growing Need for Data Annotation in 2025

As we move further into the AI-driven era, data annotation has evolved from a back-end requirement into a key component of AI development. In 2025, the need for labelled data is not just increasing; it is surging.

So what exactly is behind this extraordinary growth?

- Growth in Self-Driving Technologies

Labelled spatial data is essential for self-driving vehicles, industrial robots, and warehouse automation systems to navigate physical surroundings safely and effectively.

An autonomous system’s decisions are learned from historical data, and for its algorithms to “see,” “sense,” and “decide,” that data must be accurately labelled. Significant investments in real-time model training by firms such as Tesla, Waymo, and NVIDIA are increasing demand for scalable, precise annotation platforms.

- Growth in AI-Driven Healthcare Solutions

AI adoption in healthcare is accelerating, especially in fields like:

Medical imaging (X-rays, MRIs, and CT scans), where AI helps identify illnesses such as pneumonia and cancer.

Pathology, where annotated microscope slides aid cellular classification.

Electronic Health Records (EHR), where natural language processing extracts valuable information.

Because companies like Google Health, IBM Watson, and Aidoc are building models that require highly domain-specific annotation by medical specialists, the annotation workforce needs to grow and specialize quickly.

Expect 2025 to see the rise of specialized annotation businesses focused solely on healthcare, offering HIPAA-compliant platforms that support secure collaboration between radiologists and physicians.

- NLP Growth in Legal Technology and Finance

As the banking, finance, and legal sectors adopt Natural Language Processing (NLP) at an exponential rate, high-quality annotated text has become essential.

Among the use cases are:

- Summarization of documents

- Financial news sentiment analysis

- Examining the contract and extracting clauses

- Fraud detection with transaction tracking

For example, JP Morgan’s COiN software interprets commercial loan agreements using machine learning. Clean, organized, and labelled language datasets are necessary for each of these models to function.

Because of laws like the CCPA and GDPR, annotation platforms in these sectors must also provide traceability, version control, and audit trails, which further increases the complexity and value of enterprise-grade annotation services.

- New Multimodal Models and Generative AI

The proliferation of generative AI (GenAI) in tools such as ChatGPT, Midjourney, Runway, and Sora is expanding the requirements for annotated datasets, which now include:

Multimodal datasets, which integrate and link text, audio, video, and images

Contextual annotation that takes tone, intent, and emotion into account

Prompt engineering feedback that annotates user inputs and model outputs to improve performance

Large volumes of cross-annotated data are needed to train multimodal transformers such as GPT-4o or Gemini 1.5, which frequently require real-time human-in-the-loop (HITL) review cycles. The need for interactive, AI-assisted annotation tools that can operate across domains in unified workflows has increased as a result.

Real-World Case Studies: What Data Annotation Looks Like in Practice

Case Study 1: Accuracy via Data Annotation in Tesla’s Autonomous Driving

Industry: AI / Automotive

Use Case: Self-Driving Cars

Type of Annotation: Video and Image Annotation

Background: To identify and respond to real-world situations, Tesla’s Full Self-Driving (FSD) system mostly depends on annotated data. The business continuously trains its computer vision models using millions of photos and video frames taken by its fleet.

Implementation: Tesla uses a proprietary in-house data annotation pipeline to label objects such as pedestrians, traffic signals, lane boundaries, animals, and cars under different weather and lighting conditions. Real-time annotation updates enhance Tesla’s Autopilot features.

Result: This process helped Tesla significantly reduce intervention rates and improve real-world performance, particularly in complex urban settings. Their human-in-the-loop annotation-powered continuous learning loop allows for quicker rollouts of enhanced

Source:

https://www.tesla.com/en_GB/blog/all-tesla-cars-being-produced-now-have-full-self-driving-hardware

https://spectrum.ieee.org/how-teslas-autopilot-sees-the-road

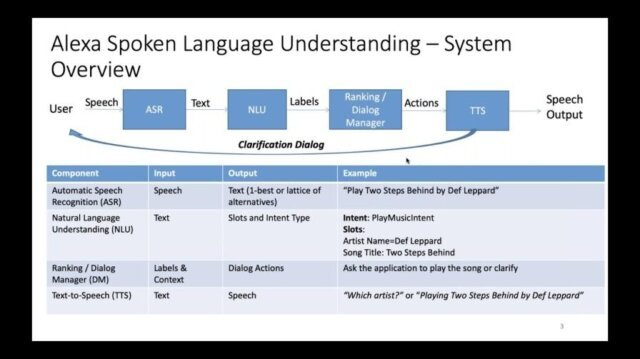

Case Study 2: Scaling Natural Language Understanding with Amazon Alexa

Industry: Consumer Technology / Voice AI

Use Case: Natural Language Processing (NLP)

Type of Annotation: Audio and Text Annotation

Background: In order to comprehend a wide range of user intents across languages, dialects, and accents, Amazon’s Alexa relies on enormous volumes of annotated conversational data.

Implementation: Annotation teams manually transcribe voice commands and label them with intent, sentiment, and contextual elements (e.g., “Play Shape of You” → {intent: play music, artist: Ed Sheeran}). To ensure linguistic diversity, Amazon employs both expert and crowdsourced annotators in locations worldwide.

Result: The annotated dataset helped Amazon significantly improve Alexa’s NLU accuracy, reducing misunderstandings and enhancing the user experience across more than 20 languages.

Source:

https://www.amazon.science/

https://voicebot.ai/2023/09/15/amazon-enhances-alexa-ai-language-support/

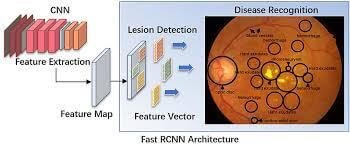

Case Study 3: Detecting Diabetic Retinopathy with AI at Google Health

Industry: Healthcare / Medical Imaging

Use Case: Disease Detection

Type of Annotation: Image Annotation (Retinal Scans)

Background: To identify diabetic retinopathy in retinal scans, Google Health created an AI system. A large amount of well-labelled medical data is necessary for accurate detection.

Implementation: Google partnered with ophthalmologists around the world to grade the presence and severity of retinopathy in thousands of retinal images. Each image was labelled by multiple experts to reduce subjectivity and improve agreement.

Result: The annotated dataset was used to train a deep learning model whose diagnostic accuracy was on par with board-certified ophthalmologists. The model was deployed in clinics across Thailand and India to help reduce blindness caused by delayed diagnosis.

Source:

https://health.google/intl/en_in/

https://jamanetwork.com/journals/jama/fullarticle/2675024

Conclusion:

Annotated data is the foundation of intelligent systems, from conversational AI to self-driving cars. In 2025, the need for high-quality annotation, along with the tools and techniques that support it, is only growing.

By understanding what data annotation actually involves and selecting the right tools, you can stay ahead of the curve and future-proof your AI initiatives.

Whether you are a data scientist, start-up founder, or product manager, this guide gives you the information you need to navigate the rapidly changing field of data annotation.

Are you prepared to begin? Compare tools and start your own annotation project right now!