Introduction: In 2025, the Right Data Management Strategy Tools Will Make or Break Your Data Strategy

“Are your current data tools ready for decentralized architectures, AI integration, and real-time analytics?”

“Is your company still relying on legacy systems in an era of cloud computing and the Internet of Things (IoT)?”

“What if your competitors are already using tools that deliver insights ten times faster?”

Welcome to 2025, when data is no longer just a business asset but your biggest strategic differentiator.

The problem is that even the strongest data strategy will fall short without the right Data Management Strategy Tools to support it. The sudden influx of data from artificial intelligence (AI), IoT, SaaS platforms, and hybrid workforces has dramatically changed the rules of the game. Companies can no longer rely on inflexible legacy systems to drive innovation, manage data lifecycles, and ensure compliance.

Today’s leading companies use a modern set of Data Management Strategy Tools that:

- adapt to both centralized and decentralized architectures (see data fabric and data mesh),

- enable real-time, AI-ready governance and pipelines,

- protect sensitive information with built-in GDPR, HIPAA, and other compliance controls,

- give data scientists and business users self-service capabilities.

This blog article explores the top-tier Data Management Strategy Tools for 2025, covering topics like:

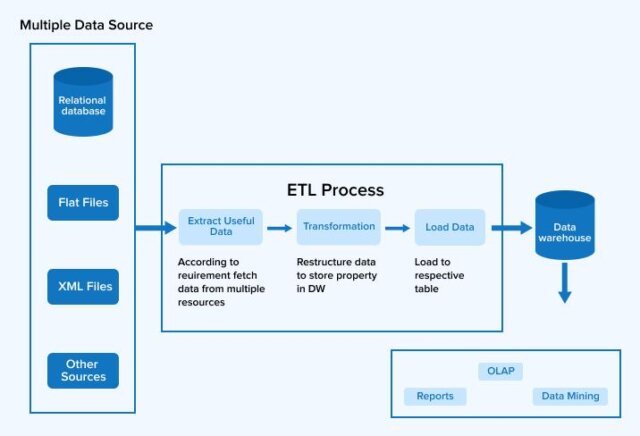

- Integration of data with ETL/ELT,

- Data cataloguing and governance,

- Monitoring of quality,

- Administration of master and metadata,

- Automation of privacy and security,

- AI-enabled real-time analytics.

You will discover:

- Real-world examples from companies such as Siemens, Maersk, Netflix, and Snowflake.

- Tables comparing leading tools by deployment options, pricing, and functionality.

- Best practices to guide your technology stack choices based on industry demands and your data maturity.

This guide will help you find the right Data Management Strategy Tools to turn your plan into measurable results, whether you’re a data engineer building pipelines or a CDO shaping an enterprise-wide strategy.

Let’s examine the data management toolkit that will shape business performance in 2025 and beyond.

What Is a Data Management Strategy Tool?

A data management strategy tool is a software application, platform, or framework that supports the development, implementation, and oversight of a company’s data management strategy. These technologies simplify how an organization handles, stores, integrates, secures, and controls its data, ensuring that data stays accurate, consistent, available, and protected throughout its lifecycle.

Rather than being a single solution, data management strategy tools often consist of a suite of products or integrated services that cover several aspects of data management, such as integration, data quality, governance, warehousing, and analytics.

The Essential Components of Data Management Strategy Tools

| Component | What It Does | Popular Tools |

| 1. Data Integration | Connects and combines data from multiple sources (batch or real-time). | Talend, Apache NiFi, Informatica, Fivetran |

| 2. Data Warehousing | Stores structured data for reporting and analytics. | Snowflake, Amazon Redshift, Google BigQuery |

| 3. Data Governance | Establishes roles, policies, and processes for data usage and accountability. | Collibra, Alation, Informatica Axon |

| 4. Data Quality | Validates, cleanses, and enriches data. | Informatica DQ, Talend DQ, Trifacta |

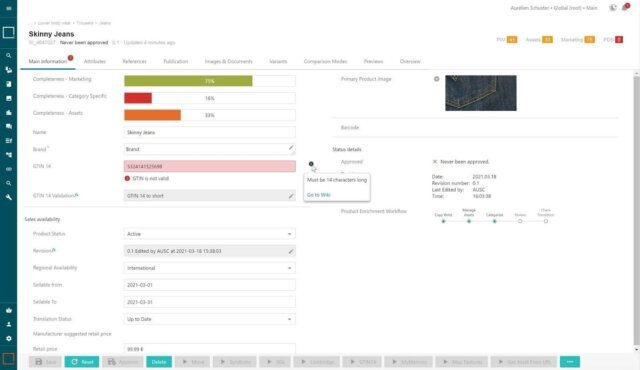

| 5. Master Data Management | Maintains consistent, accurate records for core entities (e.g., customers, products). | Informatica MDM, Reltio, IBM InfoSphere |

| 6. Data Security & Privacy | Protects sensitive data through access control, encryption, and audits. | BigID, Varonis, Immuta |

| 7. Data Cataloging | Indexes and documents data assets for easy discovery and trust. | Alation, Atlan, Data.world |

| 8. Metadata Management | Tracks technical and business metadata for traceability and understanding. | Apache Atlas, Collibra, Informatica Metadata Manager |

| 9. Data Lineage | Shows how data moves and transforms across the pipeline. | Apache Atlas, Manta, OvalEdge |

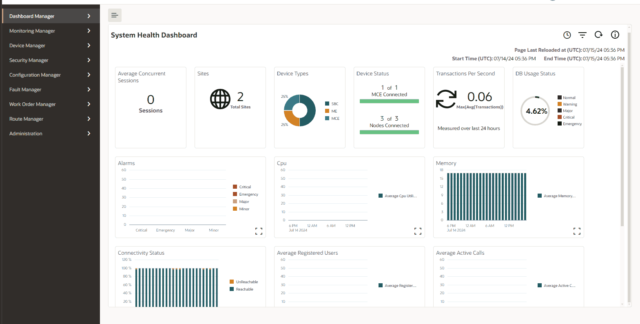

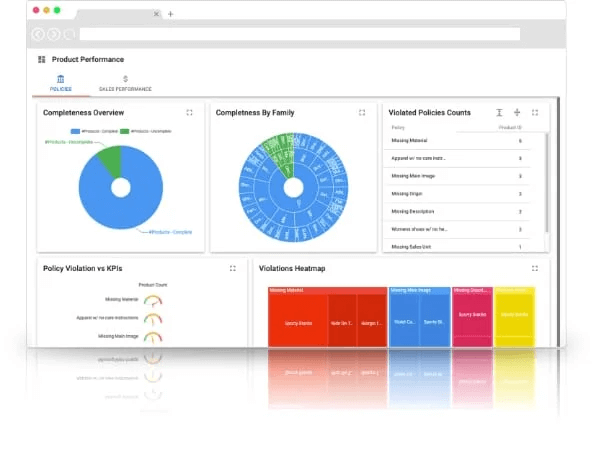

| 10. Analytics & BI | Enables insights through dashboards, reports, and visualization. | Tableau, Power BI, Looker |

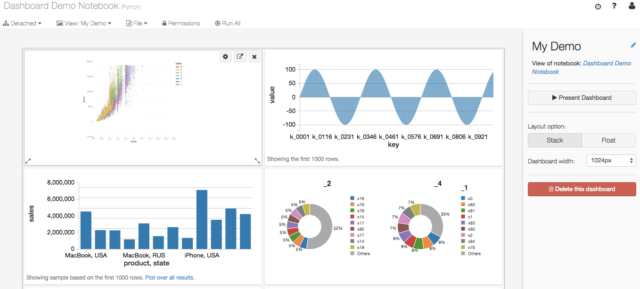

| 11. Collaboration Tools | Facilitates teamwork with shared workflows, tagging, comments, approvals. | Atlan, Collibra, Notebooks in Databricks |

The Significance of Data Management Strategy Tools

Data is the new oil, but only if it’s actionable, reliable, and easily accessible. A data management strategy tool helps you get there.

- Integrated Data Administration

Reduces silos by enabling centralized control over all data-related operations.

Example:

A global business uses Talend to integrate customer data from marketing platforms, CRM, and ERP systems.

- Adherence to Regulations

Helps you comply with regulations such as HIPAA, CCPA, and GDPR.

Example:

A healthcare provider uses Collibra to manage compliance policies and document where sensitive information is stored.

- Consistency and Accuracy of Data

Decreases reporting and forecasting mistakes by improving data quality across all platforms.

Example:

A bank uses Informatica to maintain a single version of the truth for client profiles.

- Better Business Decisions

AI and analytics run on timely, high-quality data, which leads to better-informed decisions.

Example:

A retailer analyses consumer behaviour in real time using Snowflake and Power BI.

- Democratization of Data

Makes data accessible to non-technical users through self-service dashboards and tools such as data catalogues.

Example:

A startup uses Alation to enable user-friendly data search and discovery across departments.

Data Management Strategy Tools

This section examines the 15 key categories of data management strategy tools that form the foundation of modern data ecosystems in 2025.

Each category tackles distinct opportunities and challenges, whether that means ensuring data governance and quality, orchestrating complex pipelines, or enabling machine learning at scale.

Understanding these categories and their leading platforms will help you build a robust, flexible, future-proof data strategy that meets your company’s needs.

Before examining each category in detail, here is a summary table of the categorized data management strategy tools.

| Category Number | Category Name | Primary Focus | Example Tool(s) |

| 1 | Data Integration Tools | Data ingestion and synchronization | Fivetran, Talend |

| 2 | Data Warehousing Tools | Centralized data storage and querying | Snowflake, Redshift |

| 3 | Data Governance Tools | Policy enforcement and compliance | Collibra, Alation |

| 4 | Data Quality Tools | Data cleansing and validation | Talend Data Quality, Ataccama |

| 5 | Data Security Tools | Data protection, encryption, and access control | Immuta, Privacera |

| 6 | Metadata Management Tools | Managing data about data (metadata) | Informatica Metadata Manager |

| 7 | Data Lineage & Catalogue Tools | Tracking data origin and cataloging | MANTA, Octopize |

| 8 | Data Modelling Tools | Designing data structures and schemas | Erwin, SAP PowerDesigner |

| 9 | ETL/ELT Tools | Extract, Transform, Load/Load processes | Apache NiFi, Matillion |

| 10 | Data Orchestration & Pipeline Tools | Automating and scheduling data workflows | Apache Airflow, Prefect |

| 11 | Data Observability & Monitoring Tools | Monitoring data quality and pipeline health | Monte Carlo, Bigeye |

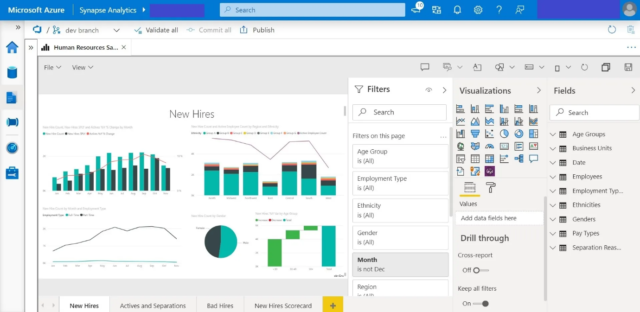

| 12 | Business Intelligence & Analytics Tools | Data visualization and reporting | Tableau, Power BI |

| 13 | Cloud Data Platforms & Warehouses | Cloud-native data storage and compute | Google BigQuery, Azure Synapse |

| 14 | Master Data Management (MDM) Tools | Creating single source of truth for master data | Informatica MDM, SAP MDG |

| 15 | Data Science & Machine Learning Platforms | Building, training, and deploying ML models | Databricks, AWS SageMaker |

Let’s break down each of the 15 data management strategy tools categories.

Category 1: Data Integration and ELT Tools

In 2025, the best data integration tools serve both technical and business teams, emphasizing real-time ingestion, schema evolution, scalability, and no-code/low-code development.

The following are the most important tools to think about:

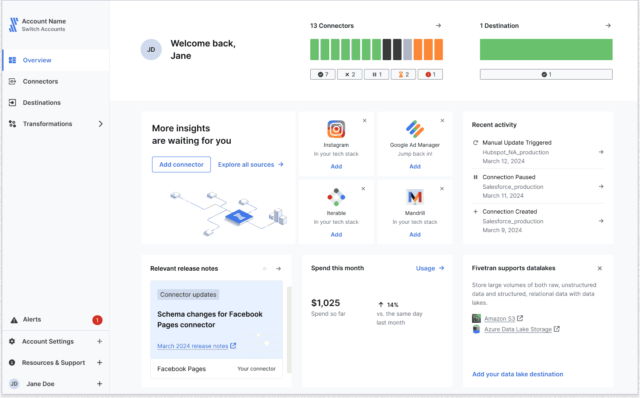

- Fivetran

Goal: To streamline data pipelines from more than 300 sources into popular cloud data warehouses with a modern, fully managed ELT platform.

Key features:

- Pre-built connectors for Google Analytics, Shopify, Salesforce, Workday, and other platforms.

- Automatic schema updates and schema-change management.

- Incremental syncs and re-syncs of historical data.

- Cloud-native, with minimal setup.

Real-World Illustration:

Square (Block, Inc.) uses Fivetran to feed customer transaction data into Snowflake for real-time business intelligence. Without building manual pipelines, data scientists and product managers can monitor performance across Square’s payment devices.

Best Practices:

- Use Fivetran for no-code ELT in fast-moving analytics environments.

- Combine it with dbt for post-ingestion transformation.

- Monitor connector freshness (time since the last successful sync) to ensure reporting accuracy.
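To make the freshness best practice concrete, here is a minimal, tool-agnostic sketch. It assumes you can already obtain each connector’s last successful sync time (for example, from your ELT tool’s status page or API); the connector names, timestamps, and 6-hour threshold are hypothetical, and no Fivetran API is used.

```python
from __future__ import annotations

from datetime import datetime, timedelta, timezone


def stale_connectors(last_synced: dict[str, datetime],
                     max_age: timedelta,
                     now: datetime | None = None) -> list[str]:
    """Return the names of connectors whose last successful sync is older than max_age."""
    now = now or datetime.now(timezone.utc)
    return sorted(name for name, synced_at in last_synced.items()
                  if now - synced_at > max_age)


# Hypothetical connectors and last-sync times, for illustration only.
now = datetime(2025, 1, 15, 12, 0, tzinfo=timezone.utc)
syncs = {
    "salesforce": now - timedelta(hours=1),
    "shopify": now - timedelta(hours=9),
    "google_analytics": now - timedelta(hours=30),
}
print(stale_connectors(syncs, max_age=timedelta(hours=6), now=now))
# ['google_analytics', 'shopify']
```

In practice, the threshold should reflect each connector’s sync schedule, and a check like this would typically run on a schedule and feed an alerting channel.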

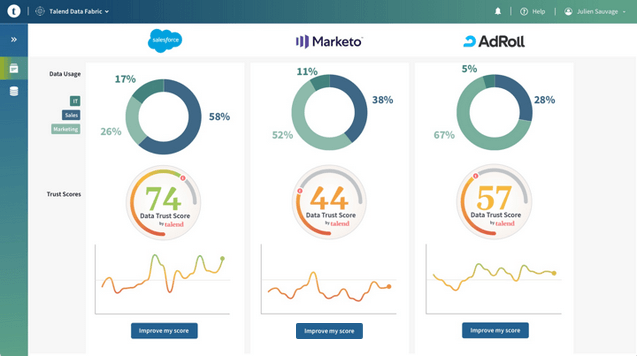

- Talend

Goal: An all-in-one enterprise and open-source platform covering data integration, data quality, and governance.

Key features:

- ETL flow design with a rich GUI.

- Integrated data transformation, cleaning, and profiling.

- Integration with cloud storage, Hadoop, and Kafka.

- Support for enterprise-level data governance.

Real-World Illustration:

Domino’s Pizza used Talend to link heterogeneous marketing, point-of-sale, and delivery systems worldwide, consolidating over 85 million consumer profiles. This made it possible to provide tailored offers based on behavioral segmentation, which increased conversion rates.

Best Practices:

- Use Talend’s integrated data quality module to catch problems early in the pipeline.

- Use Talend Cloud for scalable processing across hybrid architectures.

- Talend is ideal for businesses that need tight integration between governance procedures and data pipelines.
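Data profiling, one of the Talend capabilities listed above, boils down to computing per-column statistics before data moves downstream. The sketch below is a plain-Python illustration of that idea, not Talend’s API; the sample records and the 10% null-rate threshold are assumptions.

```python
def profile(rows: list[dict], columns: list[str]) -> dict[str, dict]:
    """Compute simple per-column statistics: null rate and distinct-value count."""
    total = len(rows)
    stats = {}
    for col in columns:
        values = [r.get(col) for r in rows]
        non_null = [v for v in values if v not in (None, "")]  # treat "" as missing
        stats[col] = {
            "null_rate": (total - len(non_null)) / total if total else 0.0,
            "distinct": len(set(non_null)),
        }
    return stats


def flag_columns(stats: dict[str, dict], max_null_rate: float = 0.1) -> list[str]:
    """Return columns whose null rate exceeds the (assumed) threshold."""
    return [col for col, s in stats.items() if s["null_rate"] > max_null_rate]


# Hypothetical customer records with some missing values.
customers = [
    {"id": 1, "email": "a@example.com", "country": "US"},
    {"id": 2, "email": "", "country": "US"},
    {"id": 3, "email": "c@example.com", "country": None},
    {"id": 4, "email": "d@example.com", "country": "DE"},
]
stats = profile(customers, ["id", "email", "country"])
print(stats["email"])       # {'null_rate': 0.25, 'distinct': 3}
print(flag_columns(stats))  # ['email', 'country']
```

Running checks like these at ingestion time is what lets problems surface early, before flawed data reaches reports.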

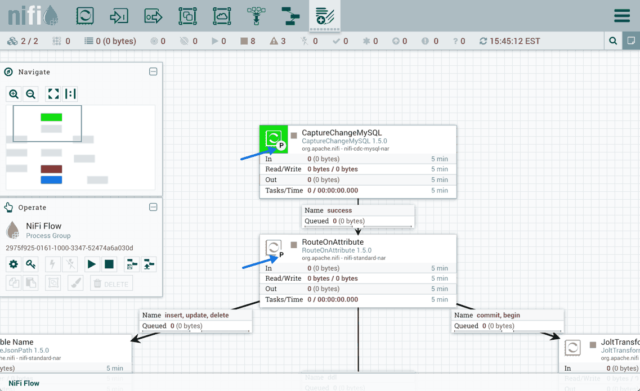

- Apache NiFi

Goal: A flow-based programming platform that automates and manages data flows between systems.

Key features:

- Drag-and-drop, GUI-based flow design.

- Fine-grained management of queueing, retries, and backpressure.

- More than 200 processors available out of the box.

- Support for real-time streaming from edge devices to data lakes.

Real-World Illustration:

NASA’s Jet Propulsion Laboratory uses NiFi to ingest and process massive volumes of telemetry data from space missions and satellites. With guaranteed delivery and real-time filtering, the system sends data to AWS for further processing and storage.

Best Practices:

- Well suited to hybrid data environments that move data between cloud, fog, and edge layers.

- Integrate with Apache Kafka or Apache Pulsar for continuous streaming ingestion.

- For data lineage and auditing, use NiFi’s provenance tracking.
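Backpressure, listed among NiFi’s key features, means a connection stops accepting new items once its queue reaches a threshold, so upstream steps pause instead of overwhelming downstream ones. The minimal Python sketch below models that behaviour with a bounded queue; the `Connection` class and its threshold are hypothetical and do not reflect NiFi’s internals or configuration.

```python
from collections import deque


class Connection:
    """Hypothetical bounded queue between two flow steps."""

    def __init__(self, object_threshold: int):
        self.object_threshold = object_threshold
        self.queue = deque()

    def backpressure_engaged(self) -> bool:
        return len(self.queue) >= self.object_threshold

    def offer(self, item) -> bool:
        """Enqueue the item unless backpressure is engaged; on False, the caller retries later."""
        if self.backpressure_engaged():
            return False
        self.queue.append(item)
        return True

    def poll(self):
        """Remove and return the oldest item, or None if the queue is empty."""
        return self.queue.popleft() if self.queue else None


conn = Connection(object_threshold=3)
accepted = [conn.offer(f"record-{i}") for i in range(5)]
print(accepted)                 # [True, True, True, False, False]
conn.poll()                     # downstream consumes one item, relieving pressure
print(conn.offer("record-5"))   # True
```

The key design point is that the producer is told "not now" rather than having data dropped, which is what allows retries and guaranteed delivery.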

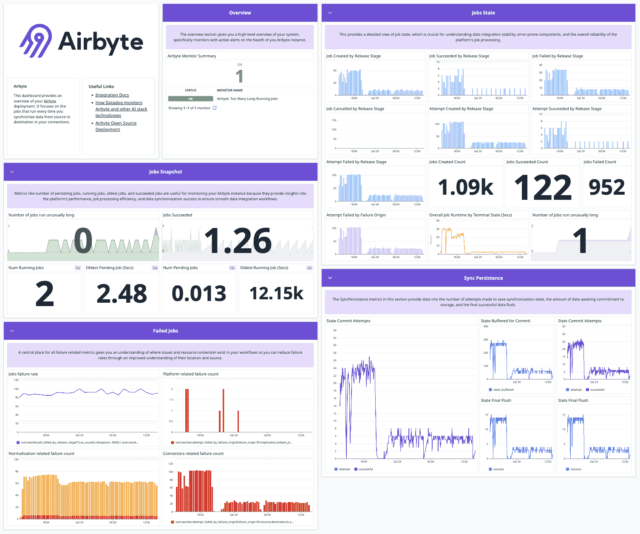

- Airbyte

Goal: An open-source ELT platform focused on simplicity, flexibility, and community-driven connector development.

Key features:

- More than 300 pre-built connectors, plus support for custom ones.

- Supports incremental loading and Change Data Capture (CDC).

- UI- or API-driven deployment.

- Self-hosted and cloud-managed deployment options.

Real-World Illustration:

SafeGraph, a geospatial data provider, uses Airbyte to collect and standardize location datasets from internal systems and public APIs into Snowflake, powering machine learning models and customer-facing dashboards.

Best Practices:

- Airbyte is ideal for technical teams that prefer open-source tools.

- Pair it with dbt for transformation and data testing.

- Excellent for startups and mid-sized teams that need affordable, adaptable ELT.
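Incremental loading, listed among Airbyte’s features above, commonly works with a cursor: the sync remembers the highest value of a field such as `updated_at` and reads only newer records next time. The Python sketch below illustrates cursor-based incremental sync in general terms; the field names, state format, and data are assumptions, not Airbyte’s protocol. Log-based CDC works differently, reading the database’s change log instead.

```python
def incremental_sync(source: list[dict], state: dict, cursor_field: str = "updated_at"):
    """Return records newer than the saved cursor, plus the updated state."""
    last = state.get("cursor")
    new = [r for r in source if last is None or r[cursor_field] > last]
    new.sort(key=lambda r: r[cursor_field])
    if new:
        state = {**state, "cursor": new[-1][cursor_field]}  # advance the cursor
    return new, state


# Hypothetical source table; ISO-8601 strings in one format compare correctly as text.
source = [
    {"id": 1, "updated_at": "2025-01-01T10:00:00"},
    {"id": 2, "updated_at": "2025-01-02T09:30:00"},
]
batch, state = incremental_sync(source, {})
print([r["id"] for r in batch], state)  # [1, 2] {'cursor': '2025-01-02T09:30:00'}

source.append({"id": 3, "updated_at": "2025-01-03T08:00:00"})
batch, state = incremental_sync(source, state)
print([r["id"] for r in batch])         # [3]
```

A strict `>` comparison can miss late-arriving records that share the last cursor value, which is one reason production tools add safeguards such as deduplication on the destination.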

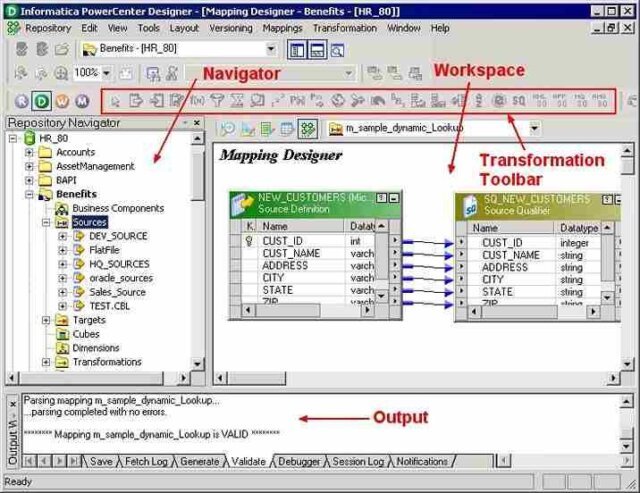

- Informatica PowerCenter

Goal: A mature, enterprise-grade ETL platform used in regulated sectors and large-scale operations.

Key features:

- Visual ETL workflow design, with rollback and error handling.

- Role-based access with strong security integration.

- Support for both batch and real-time processing.

- Comprehensive integration with legacy systems, ERP, and CRM.

Real-world Example:

General Electric (GE) uses PowerCenter to connect IoT, ERP, and CRM data from many business divisions. The platform supports SOX and HIPAA compliance while providing consistent master data across supply chains and finance.

Best Practices:

- Best utilized in contexts that require version control, strict governance, and audit trails.

- Combine it with Axon and Informatica Data Quality for comprehensive governance.

- Extend to the Informatica Intelligent Data Management Cloud (IDMC) for AI/ML capabilities.

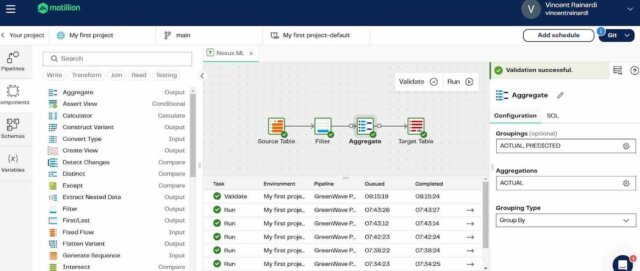

- Matillion

Goal: A cloud-based data transformation tool built for modern platforms such as Snowflake, Redshift, and BigQuery.

Key features:

- Visual, no-code job orchestration.

- DevOps integration for CI/CD of data pipelines.

- Built-in connectors and transformations.

- Scalable and serverless deployment.

Real-world Example:

Cisco Systems uses Matillion to orchestrate and transform data across AWS and Azure, allowing product teams to launch dashboards in hours rather than days. Matillion’s tight integration with Snowflake enables faster financial reporting and customer segmentation.

Best Practices:

- Matillion is ideal for cloud-first businesses with large, distributed data teams.

- Keep Matillion projects under version control to support collaborative development.

- Use Matillion’s orchestration to handle dependencies between pipeline steps.
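Handling dependencies between pipeline steps amounts to running them in a topological order of their dependency graph, with independent steps free to run in parallel. The sketch below uses Python’s standard `graphlib` module (Python 3.9+) to illustrate the idea; the step names are hypothetical, and this is not how Matillion jobs are defined.

```python
from graphlib import TopologicalSorter

# Each hypothetical step maps to the set of steps it depends on.
pipeline = {
    "load_orders": set(),
    "load_customers": set(),
    "clean_orders": {"load_orders"},
    "join_orders_customers": {"clean_orders", "load_customers"},
    "publish_dashboard": {"join_orders_customers"},
}


def execution_batches(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group steps into batches whose dependencies are all complete,
    so the steps within one batch could run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies contain a cycle
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        sorter.done(*ready)  # mark the batch finished, unlocking dependent steps
    return batches


for i, batch in enumerate(execution_batches(pipeline), start=1):
    print(f"Batch {i}: {', '.join(batch)}")
# Batch 1: load_customers, load_orders
# Batch 2: clean_orders
# Batch 3: join_orders_customers
# Batch 4: publish_dashboard
```

Detecting cycles up front matters: a circular dependency between pipeline steps can never be scheduled, so an orchestrator should reject it before anything runs.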

Data Management Strategy Tools Summary Table: Data Integration and ELT Tools Comparison

| Tool | Best For | Deployment | Real-Time Support | Notable User |
|---|---|---|---|---|
| Fivetran | Plug-and-play ELT | Cloud-managed | Yes | Square |
| Talend | Integration + Data Quality | Cloud/On-Prem | Yes | Domino’s |
| Apache NiFi | Flow-based, edge-to-cloud ingestion | Open-source | Yes | NASA |
| Airbyte | Open-source custom ELT | Cloud/Self-hosted | Partial | SafeGraph |
| Informatica PowerCenter | Enterprise-grade ETL with governance | On-prem/Cloud | Yes | GE |
| Matillion | Cloud-native transformation | Cloud | Yes | Cisco |

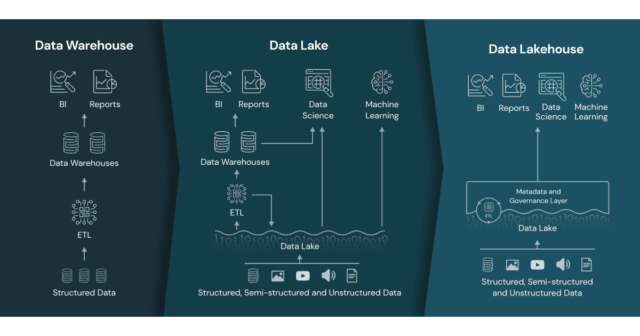

Category 2: Data Warehousing Tools

Data warehousing tools provide the foundation of a data strategy, allowing businesses to combine structured, semi-structured, and unstructured data to produce actionable insights.

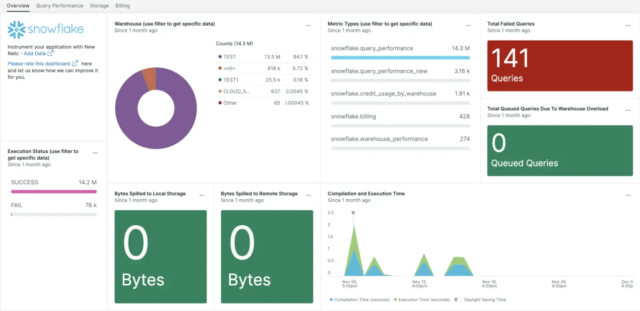

- Snowflake

Goal: A cloud-based data warehousing platform that supports scalable analytics across multiple clouds (AWS, Azure, and GCP).

Key features:

- Multi-cluster computing allows for workload segregation.

- Time Travel and zero-copy cloning for versioning and recovery.

- Native support for semi-structured data (JSON, Avro, and Parquet).

- Snowpark supports Python, Java, and Scala transformations.

Real-world Example:

PepsiCo uses Snowflake to unify its global sales, supply chain, and marketing data. This unified infrastructure enables real-time monitoring, allowing executives to adjust inventory and pricing strategies based on customer behaviour trends.

Best practices:

- Use Snowflake’s auto-scaling compute clusters to maximize performance.

- Use role-based access control (RBAC) to manage data access across departments.

- Use Snowpark to run machine learning workloads inside the warehouse.

- Amazon Redshift

Goal: A fully managed, petabyte-scale data warehouse from AWS that offers low-latency querying and seamless integration with the AWS ecosystem.

Key features:

- Massively parallel processing (MPP).

- Integration with AWS Glue, S3, and Kinesis.

- Redshift Spectrum allows you to query S3 data without loading it beforehand.

- Materialized views and result caching improve speed.

Real-world Example:

Yelp uses Redshift to analyze customer reviews, clicks, and search behavior to make more tailored recommendations. Redshift Spectrum lets data analysts query S3 data lakes directly instead of duplicating storage.

Best practices:

- Integrate Redshift with Amazon QuickSight to create quick, serverless BI dashboards.

- Enable short query acceleration (SQA) to speed up short-running report queries.

- Partition S3 data queried through Redshift Spectrum so that queries scan fewer files.
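Partitioning pays off because a query engine can skip whole S3 prefixes whose partition values fall outside the query’s filter (partition pruning). The Python sketch below illustrates the idea with Hive-style `key=value` paths, a common layout for data lake tables; the bucket name, table name, and daily partition scheme are assumptions, and the sketch does not call Redshift.

```python
from datetime import date, timedelta


def partition_prefix(bucket: str, table: str, day: date) -> str:
    """Build a Hive-style prefix such as s3://bucket/table/year=2025/month=01/day=30/."""
    return (f"s3://{bucket}/{table}/"
            f"year={day.year}/month={day.month:02d}/day={day.day:02d}/")


def prune(partitions: list[date], start: date, end: date) -> list[date]:
    """Keep only the partitions that fall inside the query's date filter."""
    return [d for d in partitions if start <= d <= end]


# Hypothetical table with one partition per day for Q1 2025 (90 partitions).
all_days = [date(2025, 1, 1) + timedelta(days=i) for i in range(90)]
scanned = prune(all_days, date(2025, 1, 30), date(2025, 2, 1))

print(f"{len(scanned)} of {len(all_days)} partitions scanned")  # 3 of 90 partitions scanned
for day in scanned:
    print(partition_prefix("example-data-lake", "clickstream", day))
```

Choosing a partition key that matches the most common filter (here, event date) is what makes pruning effective; partitioning on a column that queries rarely filter on adds overhead without reducing the data scanned.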

- Google BigQuery

Goal: A serverless, high-speed, scalable data warehouse on Google Cloud Platform.

Key features:

- SQL-based querying with integrated machine learning (BigQuery ML).

- Federated query of external data sources (such as Cloud Storage and Sheets).

- On-demand (pay-per-query) and capacity-based pricing options.

- Integration with Looker, Looker Studio (formerly Data Studio), and Vertex AI.

Real-world Example:

Spotify uses BigQuery to analyze user behaviour and song metadata to build curated playlists and improve recommendation algorithms. Queries run in seconds despite analysing billions of events.

Best practices:

- Use BigQuery ML to train models directly in the warehouse.

- Avoid SELECT * queries; select only the columns you need, and use partitioning and clustering to reduce the data scanned.

- Use materialized views and BI Engine to speed up dashboards.

- Azure Synapse Analytics

Goal: A unified analytics platform that combines data warehousing and big data processing within the Microsoft ecosystem.

Key features:

- Dedicated and serverless SQL pools.

- Native Spark engine for massive data transformation.

- Deep integration with Power BI and Microsoft Purview.

- Data integration using Synapse Pipelines.

Real-world Example:

Marks & Spencer uses Azure Synapse to connect transactional, CRM, and supply chain data and build predictive models for inventory optimization, increasing stock availability while reducing waste.

Best practices:

- Use dedicated pools for predictable, high-performance analytics on structured data, and serverless pools for exploratory work.

- Combine Synapse with Microsoft Defender to improve data governance and compliance.

- Pipeline caching and parallel copy can help you optimize your data pipelines.

- Databricks Lakehouse

Goal: A unified platform that combines data lake and data warehouse capabilities for advanced analytics and AI.

Key features:

- Built with Apache Spark and Delta Lake.

- ACID transactions and schema enforcement.

- Collaborative notebooks (Python, Scala, SQL, and R).

- Deep ML integration through MLflow and AutoML.

Real-world Example:

HSBC uses Databricks Lakehouse to combine structured transaction data with unstructured customer feedback, building NLP models that detect fraud patterns and improve customer satisfaction.

Best practices:

- Use Delta Lake for versioned, reliable data across batch and real-time pipelines.

- Use Unity Catalog to govern data access across teams and workspaces.

- Use notebooks to encourage cooperation between data engineers and scientists.

Data Management Strategy Tools Summary Table: Data Warehousing Tools Comparison

| Tool | Best For | Deployment | ML Integration | Notable User |
|---|---|---|---|---|
| Snowflake | Cloud-native, multi-cloud warehousing | Multi-cloud | Snowpark | PepsiCo |
| Amazon Redshift | AWS-based analytics & reporting | AWS Cloud | SageMaker (indirect) | Yelp |
| Google BigQuery | Serverless analytics + ML | GCP | BigQuery ML | Spotify |
| Azure Synapse | Microsoft ecosystem integration | Azure Cloud | Synapse ML | Marks & Spencer |
| Databricks Lakehouse | Unified warehouse + AI/ML | Multi-cloud | Native MLflow | HSBC |

Category 3: Data Governance and Cataloguing Tools

In an era of data democratization and tightening regulatory requirements, data governance and cataloguing technologies are critical to ensuring compliance, reliability, and accessibility. These solutions provide lineage tracing, data quality tracking, policy enforcement, and metadata management for large-scale data environments, enabling both control and agility.

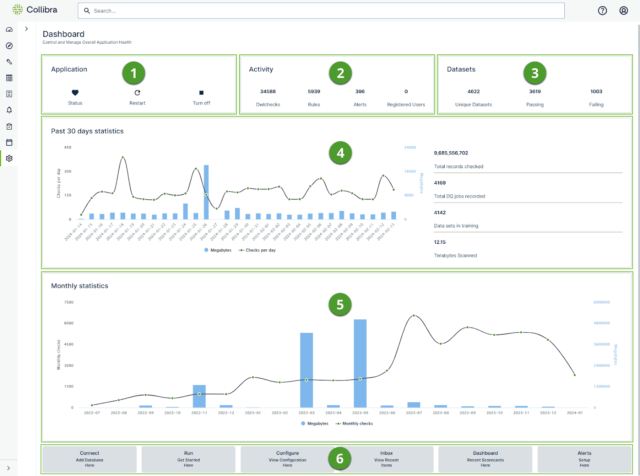

- Collibra

Goal: An enterprise-grade data governance and cataloging platform that manages data ownership, lineage, policies, and quality across the organization.

Key features:

- A centralized data dictionary and business glossary.

- Automatic data lineage and impact analysis.

- Policy compliance for GDPR, HIPAA, and CCPA.

- Data quality metrics with alerts.

Real-world Example:

AXA, a multinational insurance company, uses Collibra to standardize data definitions across regions and provide consistent KPIs for monitoring and compliance. This has reduced the effort required to trace data errors by 70%.

Best practices:

- Create a standard business lexicon early on to help unify technical and non-technical personnel.

- Integrate Collibra with Snowflake, Tableau, or SAP to improve metadata lineage.

- Use the data quality features to prioritize remediation efforts.
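Automated lineage is what makes impact analysis possible: given a lineage graph, you can list every downstream asset affected by a change or error in one source. Here is a minimal breadth-first search sketch in Python; the asset names are hypothetical, and in practice the edges would come from your catalog’s lineage metadata rather than a hand-written dictionary.

```python
from collections import deque


def downstream_impact(lineage: dict[str, list[str]], changed: str) -> list[str]:
    """Breadth-first search over lineage edges (asset -> assets that consume it)."""
    seen, queue, impacted = {changed}, deque([changed]), []
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in seen:  # guard against visiting shared descendants twice
                seen.add(child)
                impacted.append(child)
                queue.append(child)
    return impacted


# Hypothetical lineage edges.
lineage = {
    "crm.customers": ["staging.customers"],
    "staging.customers": ["mart.customer_360", "mart.churn_features"],
    "mart.customer_360": ["dashboard.kpi_overview"],
    "mart.churn_features": ["model.churn_score"],
}
print(downstream_impact(lineage, "staging.customers"))
# ['mart.customer_360', 'mart.churn_features', 'dashboard.kpi_overview', 'model.churn_score']
```

Reversing the edges gives the opposite question, upstream root-cause tracing, which is how lineage helps pinpoint where a data error originated.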

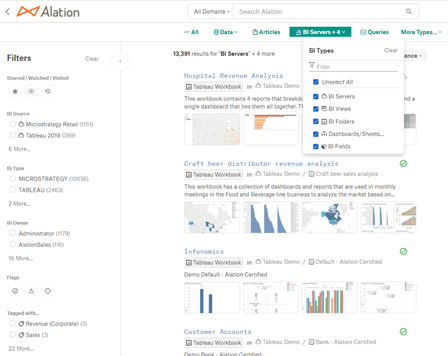

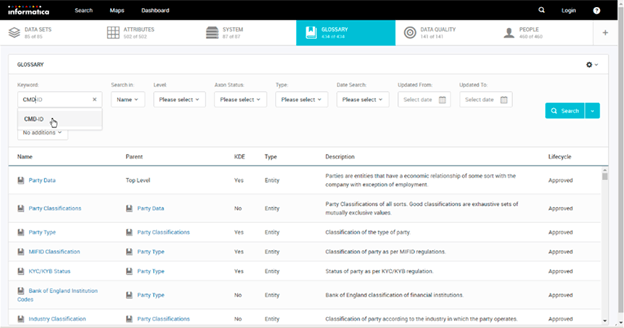

- Alation

Goal: A collaborative data catalog platform with advanced search, usage statistics, and governance features.

Key features:

- Active data governance (documentation generated automatically based on user activity).

- AI-powered search and tagging.

- Stewardship processes for policy enforcement.

- Integration with business intelligence tools and cloud warehouses.

Real-world Example:

Pfizer uses Alation to help researchers and analysts quickly find approved datasets, speeding up clinical trials and internal reporting while adhering to regulatory data usage standards.

Best practices:

- Use Alation’s usage statistics to identify the most important and frequently viewed datasets.

- Capture tribal knowledge by crowdsourcing documentation.

- Designate data stewards to maintain and certify high-impact assets.

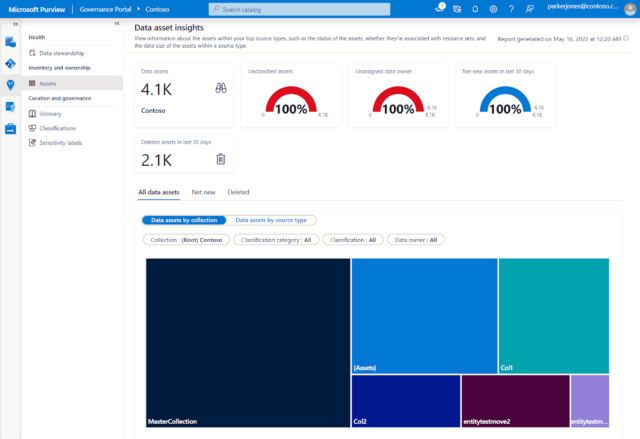

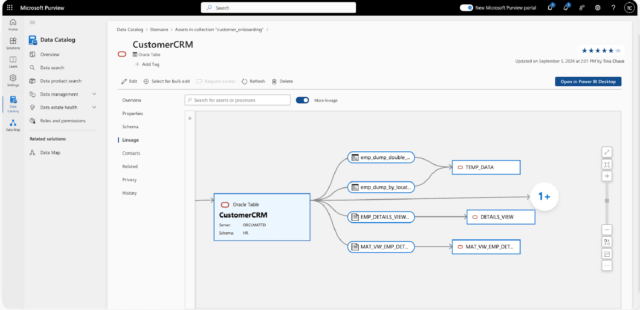

- Microsoft Purview

Goal: A data governance solution that integrates with the Microsoft environment for compliance, exploration, and lineage tracking.

Key features:

- Automated scanning of Azure, Power BI, SQL Server, and Microsoft 365.

- Policy monitoring for sensitivity labeling and data loss prevention.

- Visual lineage graphs for datasets.

- Business glossary terms and data classification tags.

Real-world Example:

Heathrow Airport uses Microsoft Purview to classify and monitor sensitive passenger and operational data, ensuring GDPR compliance across its many data platforms in Azure and Microsoft 365.

Best practices:

- Schedule frequent scans to keep metadata up to date across all platforms.

- Use sensitivity labels via Azure Information Protection to control secure sharing.

- Set up automatic alerts for DLP policy violations and retention issues.

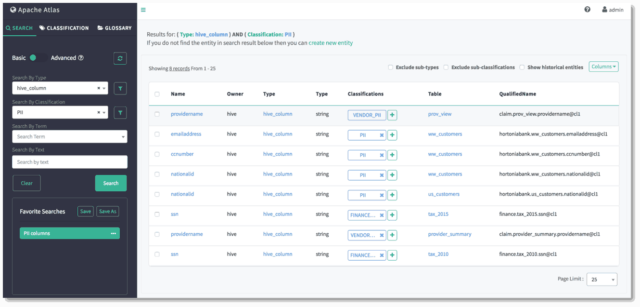

- Apache Atlas

Goal: An open-source metadata management and governance solution commonly used in big data environments, particularly alongside Apache Hadoop and Hive.

Key features:

- Metadata indexing and categorization.

- Integration with Apache Ranger for fine-grained access control.

- REST API for integrating with custom data systems.

- Real-time lineage and audit trails.

Real-world Example:

Hertz incorporates Apache Atlas into its Hadoop environment to monitor the provenance of ETL tasks and enable auditability for internal reporting procedures.

Best practices:

- Integrate with Apache Ranger to handle access control and compliance.

- Validate lineage diagrams in essential pipelines on a regular basis to ensure correctness.

- Extend metadata classification to include sensitive data tagging.
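Sensitive-data tagging often starts with pattern-based classification of sampled column values. The sketch below is a simplified, regex-based illustration in Python; the patterns, tag names, and 80% match threshold are assumptions, and it is not Atlas’s classification API (in Atlas, tags like these would be attached to entities as classifications).

```python
import re

# Hypothetical classification rules; production rules need to be far more robust.
PATTERNS = {
    "PII.Email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PII.USPhone": re.compile(r"\(?\d{3}\)?[-. ]?\d{3}[-. ]?\d{4}"),
}


def classify_column(samples: list[str], threshold: float = 0.8) -> list[str]:
    """Return tags whose pattern fully matches at least `threshold` of non-empty samples."""
    values = [s.strip() for s in samples if s and s.strip()]
    if not values:
        return []
    tags = []
    for tag, pattern in PATTERNS.items():
        hits = sum(1 for v in values if pattern.fullmatch(v))
        if hits / len(values) >= threshold:
            tags.append(tag)
    return tags


emails = ["ana@example.com", "bo@example.org", "n/a", "cy@example.net", "di@example.co.uk"]
phones = ["(212) 555-0187", "415.555.0123", "646-555-0199"]
print(classify_column(emails))               # ['PII.Email']
print(classify_column(phones))               # ['PII.USPhone']
print(classify_column(["Berlin", "Paris"]))  # []
```

The match-rate threshold tolerates a few dirty values (such as "n/a") while avoiding tagging a column because of one stray match.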

- Informatica Axon

Goal: A business-centric data governance system that prioritizes teamwork and data stewardship.

Key features:

- Data quality standards and scoring.

- Glossary terms linked to policies.

- Integration with Informatica Data Quality and MDM.

- Built-in workflows and dashboards.

Real-world Example:

UBS uses Informatica Axon to standardize regulatory reporting across its global divisions by harmonizing business definitions and controls, improving transparency and audit readiness.

Best practices:

- Integrate Axon with Informatica Data Quality to provide comprehensive governance.

- Enable business users to contribute glossary and policy definitions.

- Review dashboards regularly to confirm their accuracy and reinforce stewardship accountability.

Data Management Strategy Tools Summary Table: Data Governance and Cataloguing Tools Comparison

| Tool | Best For | Deployment | Metadata Lineage | Notable User |
|---|---|---|---|---|
| Collibra | Enterprise-wide governance & quality | Cloud | Yes | AXA |
| Alation | Collaborative catalog + usage analytics | Cloud/Hybrid | Yes | Pfizer |
| Microsoft Purview | Microsoft ecosystem governance | Azure | Yes | Heathrow Airport |
| Apache Atlas | Big data & Hadoop metadata | On-prem/Open-source | Yes | Hertz |
| Informatica Axon | Business-aligned data stewardship | Cloud/On-prem | Yes | UBS |

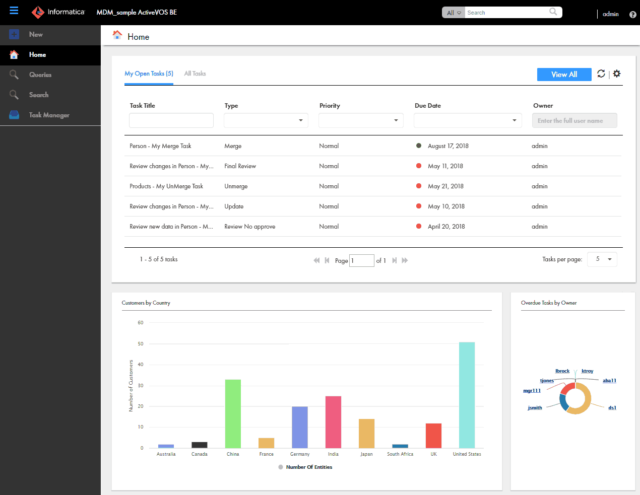

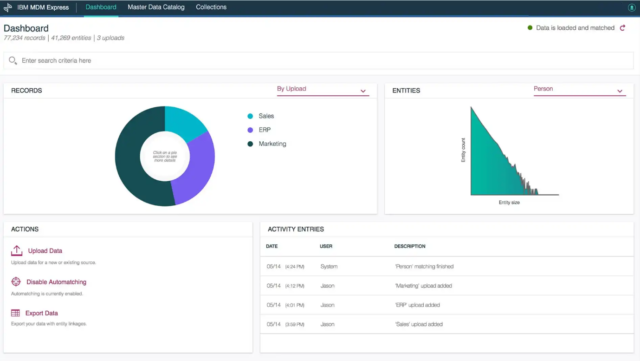

Category 4: MDM (Master Data Management) Tools

Master Data Management (MDM) technologies help firms establish a single, reliable source of truth by consolidating core business data such as customers, products, suppliers, and employees. In 2025, MDM platforms are increasingly combined with artificial intelligence and real-time synchronization to serve large-scale operational and analytical use cases.

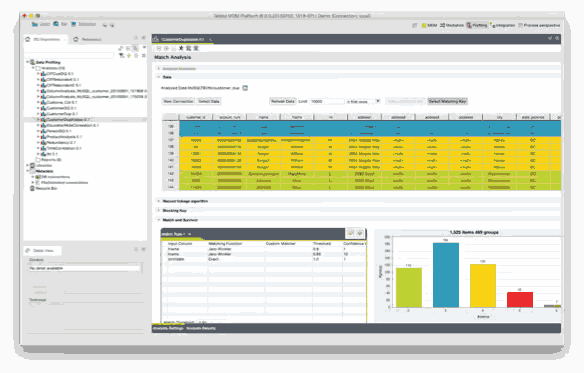

- Informatica MDM

Goal: A multi-domain solution for managing, cleaning, and synchronizing master data throughout applications.

Key features:

- Support across multiple domains (customers, products, suppliers, etc.).

- Hierarchy management and relationship modeling.

- AI/ML-driven match-and-merge rules.

- Integration with Informatica Data Quality, Axon, and cloud services.

Real-world Example:

Unilever uses Informatica MDM to integrate product and consumer data across its worldwide business groups, enabling improved reporting and consistent experiences across e-commerce channels.

Best practices:

- Use AI-based matching to eliminate duplicate records in consumer data.

- Integrate master records with ERP and CRM systems.

- Use data stewardship workflows to handle exceptions.
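Match-merge logic decides whether two records describe the same real-world entity. The sketch below uses a simple rule (exact match on normalized email, otherwise a fuzzy name match plus the same postal code) built on Python’s standard `difflib`. It is a stand-in for illustration, not Informatica’s AI/ML matching; the field names and the 0.85 similarity threshold are assumptions.

```python
from difflib import SequenceMatcher


def normalize(s: str) -> str:
    """Lowercase and collapse whitespace."""
    return " ".join(s.lower().split())


def is_match(a: dict, b: dict, name_threshold: float = 0.85) -> bool:
    """Exact match on normalized email; otherwise fuzzy name match plus same postal code."""
    email_a = normalize(a.get("email") or "")
    email_b = normalize(b.get("email") or "")
    if email_a and email_a == email_b:
        return True
    similarity = SequenceMatcher(None, normalize(a["name"]), normalize(b["name"])).ratio()
    return similarity >= name_threshold and a.get("postal_code") == b.get("postal_code")


# Hypothetical customer records.
r1 = {"name": "Jonathan Smith", "email": "J.Smith@Example.com", "postal_code": "10001"}
r2 = {"name": "jonathan  smith", "email": "j.smith@example.com", "postal_code": "10001"}
r3 = {"name": "Jonathon Smith", "email": "", "postal_code": "10001"}
r4 = {"name": "Joan Smythe", "email": "", "postal_code": "94105"}

print(is_match(r1, r2))  # True  (same email after normalization)
print(is_match(r1, r3))  # True  (similar name, same postal code)
print(is_match(r1, r4))  # False
```

Matches that fall near the threshold are exactly the cases a stewardship workflow should route to a human for review rather than merge automatically.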

- Reltio

Goal: A cloud-native, API-first MDM platform that enables real-time data integration and personalized experiences.

Key features:

- Real-time data enrichment via third-party sources.

- Graph-based modeling of relationships between entities.

- Audit trails and GDPR tooling to support compliance.

- Advanced dashboards and analytics.

Real-world Example:

L’Oréal uses Reltio to connect consumer profiles from online shopping, offline shopping, and CRM data, enabling hyper-personalized marketing and uniform customer experiences across all brands.

Best practices:

- Use Reltio APIs for real-time customer profile updates in mobile apps.

- Combine with marketing automation to run behaviour-driven campaigns.

- Build unified entity views to improve segmentation.

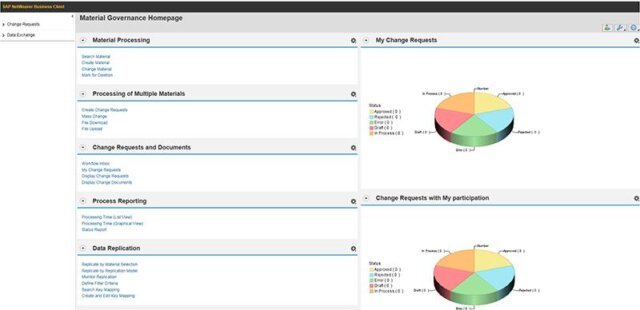

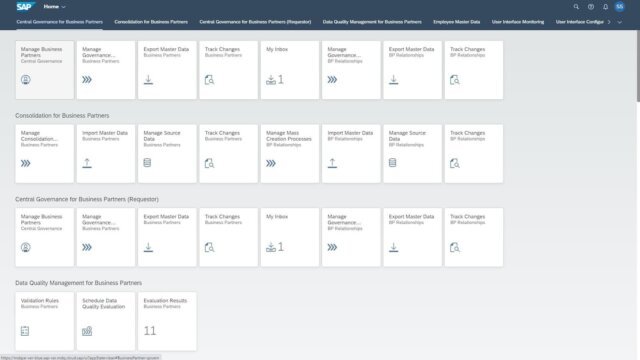

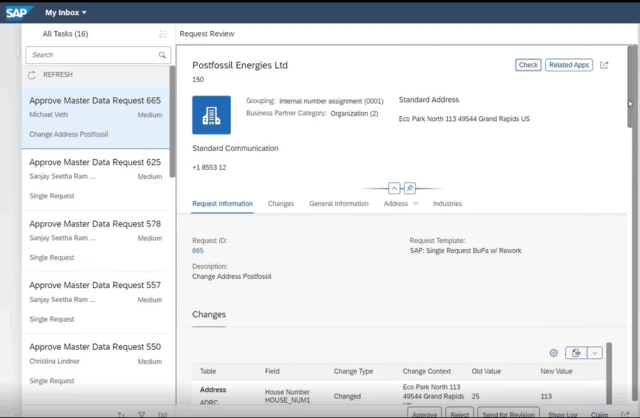

- SAP Master Data Governance (MDG)

Goal: A centralized master data governance platform that integrates seamlessly with the SAP environment.

Key features:

- Native connectivity with SAP ERP and S/4HANA.

- Centralized governance of data models.

- Change-request and approval workflows.

- Built-in business rules and validations.

Real-world Example:

Siemens uses SAP MDG to manage product master data across its extensive worldwide engineering systems. This improves BOM (Bill of Materials) reliability and reduces product development time.

Best practices:

- Use MDG to manage the lifecycle of SAP-critical master records.

- Align governance workflows with business process roles.

- Regularly check master record modification logs for compliance.

- Oracle Customer Data Management (CDM)

Goal: Oracle’s MDM solution aims to improve consumer data quality, integration, and governance on the Oracle Cloud.

Key features:

- Deduplication to create golden records.

- Data stewardship dashboards and workflow tools.

- Integration with Oracle CX and ERP platforms.

- Models tailored to various industries (such as retail and banking).

Real-world Example:

The Mayo Clinic uses Oracle CDM to maintain accurate, secure patient identification information across systems, improving clinical decision support and patient satisfaction.

Best practices:

- Define data survivorship rules for golden-record logic.

- Integrate CDM with Oracle Fusion Applications for a consistent experience.

- Use prebuilt models to accelerate MDM rollouts in healthcare and finance.
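Survivorship rules decide which value survives into the golden record when matched records disagree. The minimal Python sketch below assumes two common rules (prefer the most trusted source, then the most recently updated non-empty value); the source names, trust ranking, and fields are hypothetical and are not Oracle CDM configuration.

```python
# Hypothetical trust ranking: a higher number means a more trusted source.
SOURCE_TRUST = {"ehr": 3, "crm": 2, "web_form": 1}


def golden_record(records: list[dict], fields: list[str]) -> dict:
    """For each field, keep the non-empty value from the most trusted source;
    ties between equally trusted sources go to the most recently updated record."""
    golden = {}
    for name in fields:
        candidates = [r for r in records if r.get(name) not in (None, "")]
        if not candidates:
            golden[name] = None  # no source supplied a value
            continue
        # ISO-8601 date strings compare correctly as text.
        best = max(candidates,
                   key=lambda r: (SOURCE_TRUST.get(r["source"], 0), r["updated"]))
        golden[name] = best[name]
    return golden


# Hypothetical matched records for one patient.
records = [
    {"source": "web_form", "updated": "2025-03-01", "phone": "555-0101", "address": "12 Oak St"},
    {"source": "crm", "updated": "2025-01-15", "phone": "555-0199", "address": ""},
    {"source": "crm", "updated": "2025-02-20", "phone": "555-0142", "address": None},
    {"source": "ehr", "updated": "2024-11-02", "phone": "", "address": "12 Oak Street"},
]
print(golden_record(records, ["phone", "address"]))
# {'phone': '555-0142', 'address': '12 Oak Street'}
```

Rules are evaluated field by field, so a single golden record can draw its phone number from one source and its address from another.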

- Semarchy xDM

Goal: A low-code, agile MDM platform that enables rapid implementation and empowers business users.

Key features:

- Low-code data model and user interface design.

- Built-in data quality and stewardship tools.

- Multi-vector matching engine.

- Flexible deployment options (on-premises, cloud, and hybrid).

Real-world Example:

Red Wing Shoes used Semarchy xDM to harmonize product and store location data across retail, inventory, and distribution systems, cutting data onboarding time by 60%.

Best practices:

- Apply data validation rules to check critical records.

- Iterate quickly with the low-code UI to meet changing business demands.

- Integrate with BI tools to enable quicker analytics on master data.

Data Management Strategy Tools Summary Table: MDM (Master Data Management) Tools Comparison

| Tool | Best For | Deployment | Real-Time Sync | Notable User |
|---|---|---|---|---|
| Informatica MDM | Enterprise-grade, multi-domain MDM | Cloud/On-prem | Yes | Unilever |
| Reltio | Real-time customer data unification | Cloud-native | Yes | L’Oréal |
| SAP MDG | SAP-centric master data governance | SAP Cloud/On-prem | Limited | Siemens |
| Oracle CDM | Customer data within Oracle ecosystem | Oracle Cloud | Yes | Mayo Clinic |
| Semarchy xDM | Agile, low-code MDM for fast rollout | Cloud/Hybrid | Yes | Red Wing Shoes |

Category 5: ETL (Extract, Transform, Load) Tools

These tools were described in detail under Category 1, so the following table summarizes how they compare as ETL/ELT options.

Data Management Strategy Tools Summary Table: ETL (Extract, Transform, Load) Tools Comparison

| Tool | Best For | Deployment | Streaming Support | Notable User |
|---|---|---|---|---|
| Talend | Hybrid ETL + data quality | Cloud/On-prem | Yes | Lenovo |
| Fivetran | Automated ELT for SaaS apps | Fully Cloud | No (batch ELT) | Square |
| Apache NiFi | Real-time & edge ingestion | On-prem/Cloud | Yes | BMW |
| Matillion | In-warehouse transformations (ELT) | Cloud-native | No (ELT focus) | Slack |
| Airbyte | Open-source, flexible ELT | Cloud/Hybrid | Partial (beta) | Angellist |

Category 6: Lakehouse and Warehousing Tools

These tools were described in detail under Category 2, so the following table summarizes how they compare as lakehouse and warehousing platforms.

Data Management Strategy Tools Summary Table: Lakehouse and Warehousing Tools Comparison

| Tool | Best For | Deployment | Notable Strength | Notable User |
|---|---|---|---|---|
| Snowflake | Cloud-native, cross-cloud data warehouse | Fully Cloud | Seamless sharing, scalability | DoorDash |
| Databricks Lakehouse | Unified analytics + AI/ML workloads | Cloud (multi) | Lakehouse + ML + streaming | Shell |
| Google BigQuery | Serverless, scalable SQL analytics | Fully Cloud | ML + geospatial + federated | Spotify |
| Amazon Redshift | Petabyte-scale AWS data warehousing | AWS Cloud | Spectrum + AQUA accelerator | Yelp |
| Azure Synapse | Microsoft ecosystem + BI integration | Azure Cloud | Unified SQL + Spark + BI | Heathrow Airport |

Category 7: Compliance and Governance Tools

In 2025, leading systems offer automated data discovery, lineage tracking, and policy enforcement at scale.

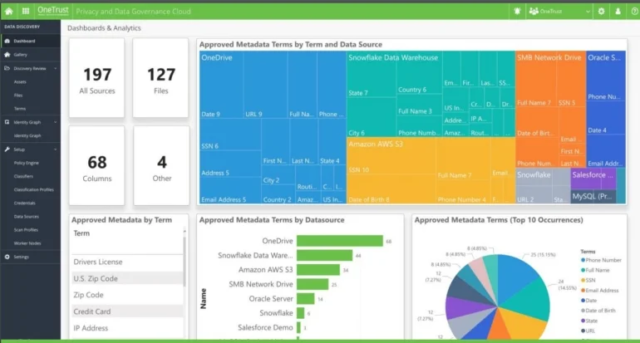

- OneTrust DataGovernance

Goal: A privacy-first data governance tool aligned with global compliance standards such as GDPR and CCPA.

Key features:

- Data discovery, categorization, and subject-rights automation.

- Real-time risk assessment and consent management.

- Integrations with CRM, cloud storage, and analytics platforms.

- Compliance automation templates.

Real-world Example:

Nestlé uses OneTrust to manage consumer data privacy across regions and to enforce cookie consent across all of its global web properties.

Best practices:

- Build compliance into your designs with automated Data Protection Impact Assessments (DPIAs).

- Centralize enforcement of policies for cross-jurisdictional teams.

- Keep consent logs and opt-in records for each user.
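
Consent records are easiest to audit when every opt-in and opt-out is stored as an immutable event. The sketch below is a hypothetical, tool-agnostic consent log in plain Python, not OneTrust’s API; the `ConsentEvent` fields and the purpose names are assumptions. The latest event for a user and purpose decides whether processing is allowed, and a missing record means no consent.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class ConsentEvent:
    """One immutable opt-in or opt-out record (hypothetical schema)."""
    user_id: str
    purpose: str  # e.g. "marketing_email", "analytics_cookies"
    granted: bool
    recorded_at: datetime


@dataclass
class ConsentLog:
    """Append-only log: events are added, never edited or deleted."""
    events: list[ConsentEvent] = field(default_factory=list)

    def record(self, user_id: str, purpose: str, granted: bool,
               when: Optional[datetime] = None) -> None:
        when = when or datetime.now(timezone.utc)
        self.events.append(ConsentEvent(user_id, purpose, granted, when))

    def is_allowed(self, user_id: str, purpose: str) -> bool:
        """The latest event wins; no record at all means no consent."""
        matching = [e for e in self.events
                    if e.user_id == user_id and e.purpose == purpose]
        if not matching:
            return False
        return max(matching, key=lambda e: e.recorded_at).granted

    def history(self, user_id: str) -> list[ConsentEvent]:
        """Full audit trail for one user, oldest first."""
        return sorted((e for e in self.events if e.user_id == user_id),
                      key=lambda e: e.recorded_at)


log = ConsentLog()
t0 = datetime(2025, 1, 1, tzinfo=timezone.utc)
t1 = datetime(2025, 3, 1, tzinfo=timezone.utc)
log.record("u42", "marketing_email", True, t0)
log.record("u42", "marketing_email", False, t1)  # user later opts out
print(log.is_allowed("u42", "marketing_email"))  # False
print(len(log.history("u42")))                   # 2
```

Because nothing is overwritten, the same log answers both "may we process this now?" and "what did this user agree to, and when?"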

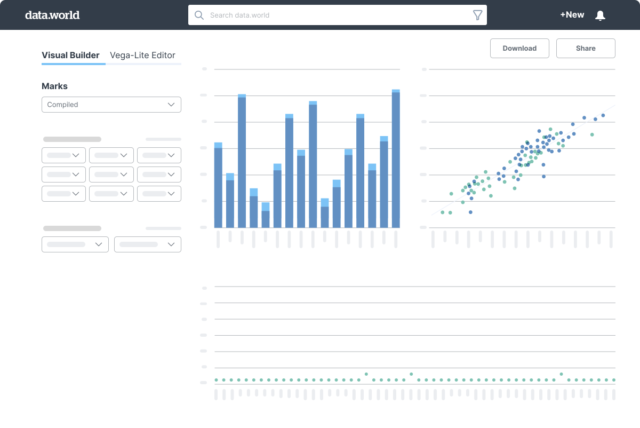

- Data.World

Goal: A cloud-native data catalog that enables collaboration and agile governance.

Key features:

- Lightweight and user-friendly semantic layer.

- Graph-based knowledge discovery.

- Real-time collaboration and documentation.

- Integrations with business intelligence tools, warehouses, and APIs.

Real-world Example:

Prologis, a worldwide logistics real estate firm, utilizes Data.World to consolidate property, leasing, and investment information across departments.

Best practices:

- Utilize tagging and semantic models to accelerate data discovery.

- Encourage peers to share data and documentation.

- Audit query logs for potential policy breaches.

Data Management Strategy Tools Summary Table: Compliance and Governance Tools Comparison

| Tool | Best For | Core Strength | Notable User | Compliance Support |
|---|---|---|---|---|
| OneTrust | Privacy & compliance management | Consent + policy automation | Nestlé | GDPR, CPRA, LGPD |
| Data.World | Agile, collaborative governance | Lightweight + graph-based | Prologis | GDPR, ISO 27001 |

Category 8: Observability and Data Quality Tools

- Monte Carlo

Goal: A leading data observability platform that monitors data freshness, volume, schema changes, and lineage to spot issues early.

Key features:

- Automated anomaly detection and root-cause analysis.

- Alerts for broken data pipelines.

- End-to-end data lineage visualisation.

- Integrations include Snowflake, Databricks, Airflow, and dbt.

Real-world Example:

Fox Corporation uses Monte Carlo to maintain trust in its advertising analytics by detecting schema changes and null-value spikes in the data behind live dashboards.

Best practices:

- Monitor critical tables with field-level health checks.

- Set alert thresholds for each domain (e.g., finance vs. marketing).

- Review lineage graphs before making upstream model changes.
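
Field-level health checks with per-domain thresholds can be illustrated with a small script. This is a hand-rolled sketch, not Monte Carlo’s API (observability platforms automate checks like these); the `THRESHOLDS` values, domain names, and row format are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical per-domain limits: finance tolerates no nulls and little delay.
THRESHOLDS = {
    "finance":   {"max_null_rate": 0.00, "max_staleness": timedelta(hours=1)},
    "marketing": {"max_null_rate": 0.05, "max_staleness": timedelta(hours=24)},
}


def null_rate(rows: list[dict], column: str) -> float:
    """Fraction of rows where `column` is missing or None."""
    if not rows:
        return 0.0
    return sum(1 for r in rows if r.get(column) is None) / len(rows)


def check_table(rows, columns, last_updated, domain, now=None) -> list[str]:
    """Return human-readable issues for one table under its domain's limits."""
    now = now or datetime.now(timezone.utc)
    limits = THRESHOLDS[domain]
    issues = []
    for col in columns:
        rate = null_rate(rows, col)
        if rate > limits["max_null_rate"]:
            issues.append(f"{col}: null rate {rate:.0%} exceeds limit")
    if now - last_updated > limits["max_staleness"]:
        issues.append("table is stale")
    return issues


orders = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": None}]
now = datetime(2025, 6, 1, 12, tzinfo=timezone.utc)
print(check_table(orders, ["id", "amount"], now - timedelta(hours=3), "finance", now))
# ['amount: null rate 50% exceeds limit', 'table is stale']
print(check_table(orders, ["id"], now - timedelta(hours=3), "marketing", now))
# []
```

The same three-hour-old table is stale for finance but acceptable for marketing, which is exactly why thresholds belong to the domain rather than to the platform.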

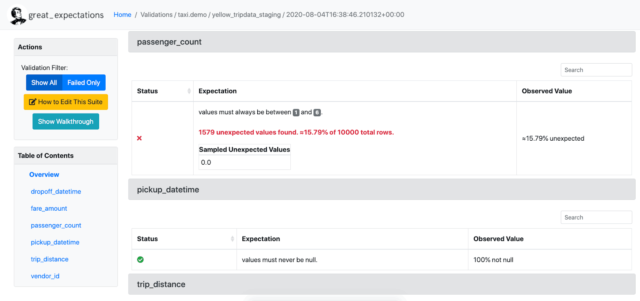

- Great Expectations

Goal: An open-source framework for defining, testing, and documenting data quality standards across pipelines.

Key features:

- Custom expectations for nulls, uniqueness, ranges, and other criteria.

- Human-readable expectation suites that can be kept under version control.

- Integrations with Airflow, Spark, Pandas, and dbt.

- Automated documentation for audits and collaboration.

Real-world Example:

Cruise (GM) uses Great Expectations to validate sensor data from its autonomous vehicles before it reaches model training, helping keep incorrect logs from degrading predictions.

Best practices:

- Create reusable expectation suites for each business domain.

- Use Checkpoints to run validations at different stages of the pipeline.

- Version and track expectations using CI/CD workflows.
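
To show what an expectation suite and a checkpoint do conceptually, here is a minimal plain-Python sketch. It deliberately does not use the Great Expectations library, whose API differs; the expectation types, column names, and JSON layout are assumptions.

```python
import json

# The suite is plain data, so it can live in Git and be reviewed in pull requests.
SUITE_JSON = """
[
  {"type": "not_null", "column": "sensor_id"},
  {"type": "unique",   "column": "frame_id"},
  {"type": "between",  "column": "speed_mps", "min": 0, "max": 70}
]
"""


def run_expectation(rows: list[dict], exp: dict) -> bool:
    """Evaluate one expectation against every row in the batch."""
    values = [r.get(exp["column"]) for r in rows]
    if exp["type"] == "not_null":
        return all(v is not None for v in values)
    if exp["type"] == "unique":
        return len(values) == len(set(values))
    if exp["type"] == "between":
        return all(v is not None and exp["min"] <= v <= exp["max"] for v in values)
    raise ValueError(f"unknown expectation type: {exp['type']}")


def validate(rows: list[dict], suite: list[dict]) -> dict:
    """Checkpoint-style run: evaluate every expectation and summarize."""
    results = {f"{e['type']}:{e['column']}": run_expectation(rows, e) for e in suite}
    return {"success": all(results.values()), "results": results}


suite = json.loads(SUITE_JSON)
batch = [
    {"sensor_id": "lidar-1", "frame_id": 1, "speed_mps": 12.5},
    {"sensor_id": "lidar-1", "frame_id": 2, "speed_mps": 95.0},  # out of range
]
report = validate(batch, suite)
print(report["success"])  # False
print(report["results"])
# {'not_null:sensor_id': True, 'unique:frame_id': True, 'between:speed_mps': False}
```

A pipeline would call `validate` at each stage and stop (or quarantine the batch) when `success` is false.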

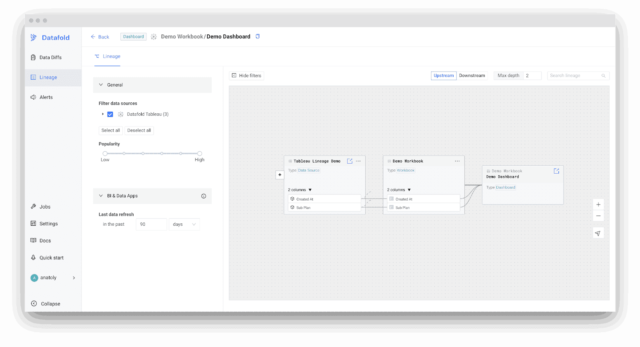

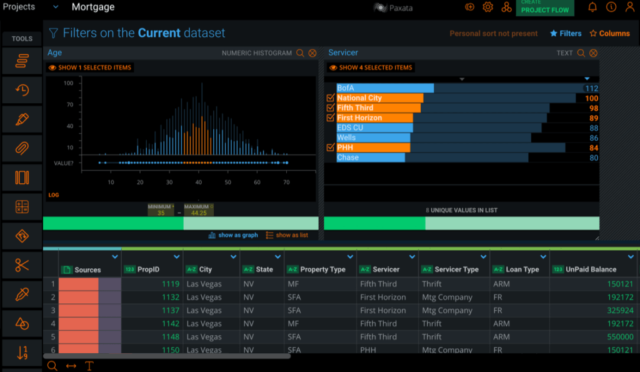

- Datafold

Goal: A data diffing and regression-testing tool for data engineers and analytics teams.

Key features:

- Column-level diffs and data drift detection.

- Integrations include dbt, Airflow, GitHub, and BigQuery.

- Diff previews before code is merged.

- Lineage-aware automated regression testing.

Real-world Example:

Drizly uses Datafold to compare the effects of changes to transformation logic and prevent data anomalies in BI dashboards and reports.

Best practices:

- Use Git workflows to run data diff tests on every pull request.

- Check downstream BI tools for unexpected changes after deployment.

- Validate important metrics during backfilling and schema modifications.
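
The core idea behind a data diff is a key-based comparison between two versions of a table, such as the production model and the development build in a pull request. The sketch below is a simplified, in-memory illustration, not Datafold’s engine; the `order_id` key and the sample rows are hypothetical, and production tools are designed for tables far too large to load like this.

```python
def diff_tables(before: list[dict], after: list[dict], key: str) -> dict:
    """Compare two versions of a table row by row on a primary key."""
    old = {r[key]: r for r in before}
    new = {r[key]: r for r in after}
    changed = {}
    for k in old.keys() & new.keys():
        # Columns whose values differ for the same primary key.
        cols = sorted(c for c in old[k].keys() | new[k].keys()
                      if old[k].get(c) != new[k].get(c))
        if cols:
            changed[k] = cols
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "changed": dict(sorted(changed.items())),
    }


prod = [{"order_id": 1, "total": 20.0}, {"order_id": 2, "total": 35.5}, {"order_id": 3, "total": 9.0}]
dev = [{"order_id": 1, "total": 20.0}, {"order_id": 2, "total": 36.0}, {"order_id": 4, "total": 12.0}]
print(diff_tables(prod, dev, "order_id"))
# {'added': [4], 'removed': [3], 'changed': {2: ['total']}}
```

An empty diff is the signal that a refactor changed no data; anything else is reviewed before merging.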

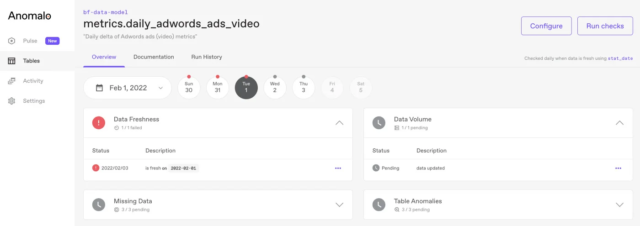

- Anomalo

Goal: AI-powered data quality monitoring software that automatically detects and explains anomalies without requiring hand-written rules or code.

Key features:

- Automatic profiling and anomaly detection.

- Natural language root cause insights.

- Integrations include Snowflake, Looker, and BigQuery.

- Track health at the dashboard level.

Real-world Example:

Subway uses Anomalo to monitor global, real-time sales and ordering patterns, detecting anomalies such as POS synchronization problems or gaps in promotional data.

Best practices:

- Let the AI surface “unknown unknowns,” then tune alerts through feedback loops.

- Integrate notifications into Slack and ticketing platforms.

- Use dashboards to monitor key performance indicators (KPIs) at a glance.
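
Anomalo detects anomalies without hand-written rules; the sketch below does not reproduce its approach. Instead, as a rough illustration of unsupervised anomaly detection in general, it swaps in a simple rolling z-score: each day is compared with the mean and standard deviation of the preceding window. The window size, threshold, and sample order counts are assumptions.

```python
from statistics import mean, stdev


def zscore_anomalies(series: list[float], window: int = 7, threshold: float = 3.0) -> list[int]:
    """Return indices whose value deviates more than `threshold` standard
    deviations from the mean of the preceding `window` values."""
    flagged = []
    for i in range(window, len(series)):
        history = series[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Perfectly flat history: any change at all is unusual.
            if series[i] != mu:
                flagged.append(i)
            continue
        if abs(series[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Daily order counts for one region (illustrative numbers); day 8 has a POS outage.
orders = [1020, 998, 1011, 1005, 987, 1030, 1002, 1015, 120, 1008]
print(zscore_anomalies(orders))  # [8]
```

A fixed rule such as "orders must exceed 500" would need to be rewritten per region; learning the baseline from recent history is what lets this kind of check find problems nobody wrote a rule for.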

- Talend Data Quality

Goal: Part of Talend’s Data Fabric suite, it provides rule-driven data profiling and cleansing.

Key features:

- Data standardization, enrichment, and de-duplication.

- Custom quality scoring and cleansing rules.

- Compatible with Talend Data Integration and Stitch.

- Embedded stewardship workflows.

Real-world Example:

Domino’s Pizza uses Talend to clean customer and order data across its global restaurants, keeping its loyalty and CRM systems consistent.

Best practices:

- Use data masking to safeguard personally identifiable information (PII) during cleansing.

- Maintain a centralized rule repository that can be reused across domains.

- Include business users in stewardship workflows to speed up issue resolution.
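
Reusable cleansing rules, de-duplication, and PII masking can be combined in one small pipeline. This is a plain-Python sketch, not Talend’s rule syntax; the field names, the rule set, and the choice to de-duplicate on email are all hypothetical.

```python
import re


def standardize(record: dict) -> dict:
    """Apply simple, reusable cleansing rules (hypothetical rule set)."""
    return {
        "name": " ".join(record["name"].split()).title(),  # collapse spaces, fix case
        "email": record["email"].strip().lower(),
        "phone": re.sub(r"\D", "", record["phone"]),          # keep digits only
    }


def mask_email(email: str) -> str:
    """Hide the local part of an email, keeping only its first character."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


def cleanse(records: list[dict]) -> list[dict]:
    """Standardize, de-duplicate on email, then mask PII for stewards."""
    seen, out = set(), []
    for rec in map(standardize, records):
        if rec["email"] in seen:
            continue  # duplicate customer after standardization
        seen.add(rec["email"])
        out.append({**rec, "email": mask_email(rec["email"])})
    return out


raw = [
    {"name": "  ana   LOPEZ ", "email": "Ana.Lopez@Example.com ", "phone": "(555) 010-2030"},
    {"name": "Ana Lopez", "email": "ana.lopez@example.com", "phone": "555.010.2030"},
]
print(cleanse(raw))
# [{'name': 'Ana Lopez', 'email': 'a***@example.com', 'phone': '5550102030'}]
```

Standardizing before de-duplicating matters: the two raw records only look identical once case, spacing, and punctuation have been normalized.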

Data Management Strategy Tools Summary Table: Observability and Data Quality Tools Comparison

| Tool | Best For | Unique Strength | Notable User | Integration Highlights |
|---|---|---|---|---|
| Monte Carlo | Enterprise data observability | Incident monitoring + lineage | Fox Corp | dbt, Snowflake, Airflow |
| Great Expectations | Open-source validation & docs | Code-first testing suites | Cruise (GM) | Spark, Pandas, Airflow |
| Datafold | Data diffs + regression testing | Git-based diffing workflows | Drizly | GitHub, BigQuery, dbt |
| Anomalo | AI anomaly detection | No-code auto-profiling | Subway | Looker, Snowflake |
| Talend Data Quality | Cleansing + enrichment workflows | Integrated with ETL/ELT stack | Domino’s Pizza | Talend Studio, Stitch |

Category 9: Metadata Management and Cataloguing Tools

As data volume and diversity grow, metadata becomes critical for understanding, finding, and managing data assets. In 2025, metadata platforms go beyond documentation, using active, real-time metadata to support data discovery, reliability, and automation.

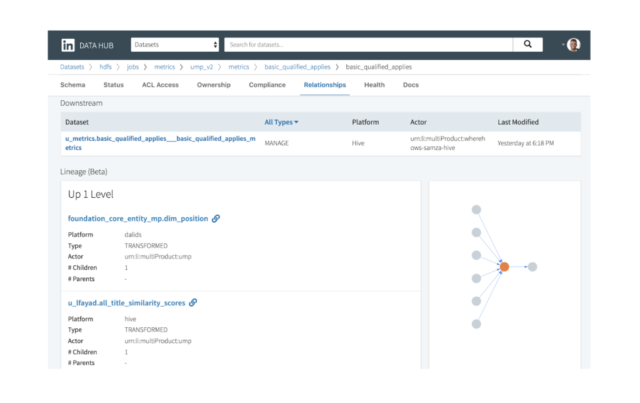

- DataHub (by LinkedIn)

Goal: A modern metadata platform that focuses on active metadata, exploration, and data observability.

Key features:

- Real-time metadata ingestion and search.

- Tracking lineage, ownership, and usage.

- Integrations with dbt, Looker, Snowflake, and Kafka, among others.

- Plugin design allows for extensibility.

Real-world Example:

Expedia Group utilizes DataHub to allow thousands of workers to explore, trust, and manage data assets across many travel brands.

Best practices:

- Develop metadata contracts between the engineering and business intelligence teams.

- Surface DataHub metadata in dashboards and query tools.

- Detect outdated assets automatically using consumption metrics.

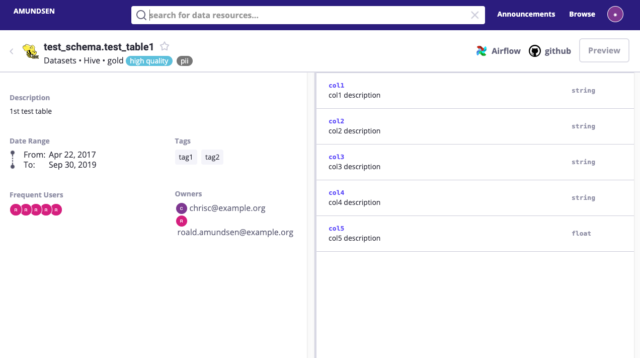

- Amundsen (by Lyft)

Goal: A lightweight, search-first metadata platform designed to improve data discovery for teams.

Key features:

- Google-like search with a popularity ranking.

- Metadata at the table and column levels, including lineage.

- Integration with Hive, Presto, Redshift, and Airflow.

- Role-based access restriction and a glossary.

Real-world Example:

Square (Block Inc.) uses Amundsen to help engineers and analysts find trusted data assets and to increase adoption of self-service analytics.

Best practices:

- Use popularity signals to recommend frequently used tables.

- Encourage SMEs to certify and document critical tables.

- Use Slack/Teams connectors to increase discoverability.
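
Popularity-aware search can be illustrated by blending text relevance with a usage signal so heavily used tables outrank stale copies. This is not Amundsen’s ranking implementation; the catalog entries, the `queries_30d` field, and the 0.5 weight are assumptions.

```python
import math

# Hypothetical catalog entries with a 30-day query count as the usage signal.
CATALOG = [
    {"name": "payments.transactions", "description": "all card transactions", "queries_30d": 5400},
    {"name": "payments.transactions_backup", "description": "old copy of card transactions", "queries_30d": 3},
    {"name": "risk.chargebacks", "description": "disputed card transactions", "queries_30d": 800},
]


def search(query: str, catalog: list[dict], popularity_weight: float = 0.5) -> list[str]:
    """Rank entries by matched query terms plus weighted log-popularity."""
    terms = query.lower().split()
    scored = []
    for item in catalog:
        text = f"{item['name']} {item['description']}".lower()
        matches = sum(term in text for term in terms)
        if matches == 0:
            continue  # popularity alone never qualifies a result
        score = matches + popularity_weight * math.log1p(item["queries_30d"])
        scored.append((score, item["name"]))
    return [name for _, name in sorted(scored, reverse=True)]


print(search("card transactions", CATALOG))
# ['payments.transactions', 'risk.chargebacks', 'payments.transactions_backup']
```

`math.log1p` dampens raw query counts, so popularity breaks ties between equally relevant tables without letting a busy but irrelevant table dominate.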

- Solidatus

Goal: Enterprise-level metadata and lineage software that prioritizes visual traceability.

Key features:

- Metadata relationships visualized as a dynamic graph.

- Support for business processes and regulatory modelling.

- Bidirectional interfaces with popular data tools.

- Time travel to compare lineage at different points in time.

Real-world Example:

HSBC uses Solidatus to meet global compliance requirements by mapping client data flows across KYC and credit systems.

Best practices:

- Visualise data journeys to identify bottlenecks and risks.

- Align metadata models with business process flows.

- Version lineage over time to assess the regulatory impact of each change.
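
Lineage-based impact analysis is essentially a graph traversal. The sketch below uses hypothetical asset names and two hypothetical quarterly snapshots: it finds every asset downstream of a change with breadth-first search and lists the edges added between versions. It illustrates the idea only and is not Solidatus’s API.

```python
from collections import deque

# Hypothetical lineage snapshots; each edge means "source feeds target".
LINEAGE_Q1 = {
    ("crm.clients", "kyc.profiles"),
    ("kyc.profiles", "credit.risk_scores"),
    ("credit.risk_scores", "reports.regulatory_capital"),
}
LINEAGE_Q2 = LINEAGE_Q1 | {("kyc.profiles", "marketing.segments")}


def downstream(edges: set[tuple[str, str]], start: str) -> set[str]:
    """Breadth-first search for every asset affected by a change to `start`."""
    children: dict[str, list[str]] = {}
    for src, dst in edges:
        children.setdefault(src, []).append(dst)
    seen, queue = set(), deque([start])
    while queue:
        for nxt in children.get(queue.popleft(), []):
            if nxt not in seen:  # also protects against cycles
                seen.add(nxt)
                queue.append(nxt)
    return seen


print(sorted(downstream(LINEAGE_Q1, "crm.clients")))
# ['credit.risk_scores', 'kyc.profiles', 'reports.regulatory_capital']
print(sorted(LINEAGE_Q2 - LINEAGE_Q1))
# [('kyc.profiles', 'marketing.segments')]
```

Diffing snapshots shows that client KYC data started flowing into a marketing asset in Q2, which is the kind of change a compliance team would want to review.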

Data Management Strategy Tools Summary Table: Metadata Management and Cataloguing Tools Comparison

| Tool | Best For | Unique Strength | Notable User | Ecosystem Focus |
|---|---|---|---|---|
| DataHub | Real-time active metadata | Extensible + community-driven | Expedia Group | dbt, Kafka, Snowflake |
| Amundsen | Lightweight metadata discovery | Search-first + simple UX | Square (Block) | Presto, Redshift, Hive |
| Solidatus | Enterprise lineage & traceability | Time-traveling visual models | HSBC | Compliance, finance |

Category 10: Master Data Management Tools

In 2025, Master Data Management (MDM) systems are more interconnected, AI-driven, and real-time than ever before, enabling more complete customer views and stronger governance.

- SAP Master Data Governance (MDG)

Goal: A solution embedded in the SAP ERP environment, well suited to organizations standardizing master data across SAP landscapes.

Key features:

- Master data governance is centralised throughout SAP S/4HANA and ECC.

- Ready-to-use data models for finance, customers, and materials.

- Role-based workflows with change-request tracking.

- Integrated data quality and rule management.

Real-world Example:

3M uses SAP MDG to standardize product and financial data across 200+ companies, improving reporting accuracy and compliance.

Best practices:

- Align governance models with SAP’s business process design.

- Use pre-built validations for financial and material master data.

- Involve SAP business users in the change request procedure.

- IBM InfoSphere MDM

Goal: An enterprise-grade platform for sophisticated MDM needs in regulated sectors such as banking and insurance.

Key features:

- Probabilistic and deterministic matching engines.

- Built-in stewardship dashboards and reporting.

- Support for hybrid on-premises and cloud installations.

- Integration with IBM DataStage, Watson, and Cloud Pak.

Real-world Example:

Barclays utilizes IBM InfoSphere MDM to manage and protect client IDs throughout banking channels and compliance systems.

Best practices:

- Use hybrid deployment models in compliance-sensitive environments.

- Apply industry-specific match rules to improve accuracy.

- Set up notifications for duplicate detection and cleanup.
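
Combining deterministic and fuzzy match rules can be sketched as follows. This is not InfoSphere’s matching engine: Python’s standard `difflib.SequenceMatcher` stands in for a probabilistic comparison, and the field names, the 0.6/0.4 weights, and the 0.85 threshold are hypothetical values you would tune per industry.

```python
from difflib import SequenceMatcher


def normalize(text: str) -> str:
    """Lower-case, drop periods, and collapse whitespace."""
    return " ".join(text.lower().replace(".", "").split())


def similarity(a: str, b: str) -> float:
    """String similarity in [0, 1] from the standard library's difflib."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def is_match(a: dict, b: dict, threshold: float = 0.85) -> bool:
    """Deterministic rule first, then a weighted fuzzy score."""
    # Deterministic rule: the same non-empty national ID means the same client.
    if a.get("national_id") and a.get("national_id") == b.get("national_id"):
        return True
    # Fuzzy rule: weighted similarity of name and address (hypothetical weights).
    score = 0.6 * similarity(a["name"], b["name"]) + 0.4 * similarity(a["address"], b["address"])
    return score >= threshold


a = {"name": "Jonathan Smith", "address": "12 High St., London", "national_id": "AB123"}
b = {"name": "jonathan  smith", "address": "12 High St London", "national_id": None}
c = {"name": "Joanna Smythe", "address": "98 Park Lane, Leeds", "national_id": None}
d = {"name": "J. Smith", "address": "PO Box 7", "national_id": "AB123"}
print(is_match(a, b), is_match(a, c), is_match(a, d))  # True False True
```

Running the cheap, exact rule first keeps the fuzzy comparison for records that genuinely need it, and record `d` shows why: its name and address look nothing alike, yet the shared ID identifies the same client.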

Data Management Strategy Tools Summary Table: Master Data Management Tools Comparison

| Tool | Best For | Unique Strength | Notable User | Deployment Focus |
|---|---|---|---|---|
| SAP MDG | SAP ecosystem MDM | Prebuilt SAP data models | 3M | Embedded in SAP landscape |
| Reltio | Real-time customer 360 | API-first architecture | Pfizer | SaaS + graph-based |
| Semarchy xDM | Agile, low-code MDM | Rapid deployment & GDPR ready | Carrefour | Cloud + On-prem |
| IBM InfoSphere MDM | Regulated industries (banking) | Complex match + compliance | Barclays | Hybrid (on-prem/cloud) |

Category 11: Data Science Tools

Data science platforms bring together the tools required for data discovery, feature engineering, model development, and deployment. In 2025, leading platforms prioritize collaboration, automated machine learning (AutoML), and scalability, making it easier for data scientists and citizen analysts to extract value from data.

- Databricks

Goal: A unified analytics platform built on Apache Spark that supports large-scale data science, machine learning (ML), and real-time analytics.

Key features:

- Delta Lake for scalable, ACID-compliant data lakes.

- Collaborative notebooks (Python, R, SQL, and Scala).

- MLflow for experiment tracking and model lifecycle management.

- AutoML and native connectivity with Spark and DBFS.

Real-world Example:

Shell uses Databricks to build AI models that predict equipment failures and optimize energy use in its upstream operations.

Best practices:

- Store both structured and unstructured data in Delta Lake for easy access.

- Use MLflow to track hyperparameter settings and compare results.

- Use a feature store to reuse features across projects.

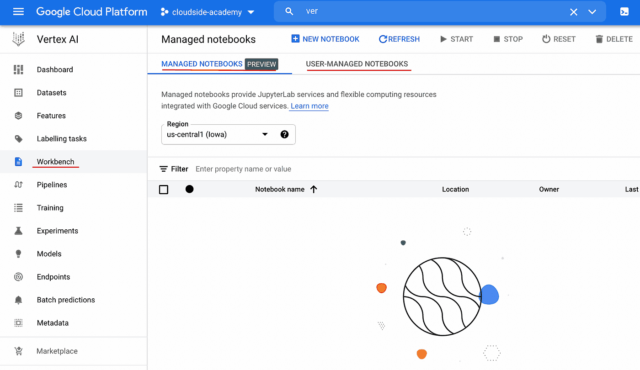

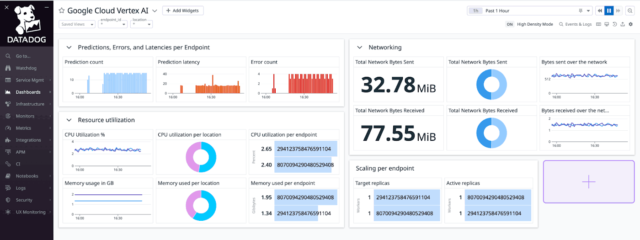

- Google Cloud Vertex AI

Goal: Managed platform for MLOps and AutoML.

Key features:

- Vertex AI Workbench for data science notebooks.

- AutoML for image, text, and tabular data.

- Built-in experiment tracking, deployment, and monitoring.

- Strong integration with BigQuery, Dataflow, and Looker.

Real-world Example:

Twitter employs Vertex AI to enhance advertising targeting models and identify harmful material at scale via deep learning.

Best practices:

- Use AutoML to establish a baseline model for comparison.

- Deploy models to Vertex AI endpoints for A/B testing and CI/CD.

- Use pipelines to monitor model drift and retrain models when needed.
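
Drift monitoring compares the distribution of a feature at serving time with its training distribution. Vertex AI’s model monitoring computes its own statistics; the sketch below swaps in the Population Stability Index (PSI), a widely used drift metric, with equal-width bins. The bin count and the 0.2 retraining threshold (a common rule of thumb) are assumptions.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 5) -> float:
    """Population Stability Index between a training sample (expected) and
    a serving sample (actual), using equal-width bins over the training range."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-4) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


DRIFT_THRESHOLD = 0.2  # common rule of thumb, not a Vertex AI default

training = [float(v) for v in range(100)]
serving = [v + 50 for v in training]  # simulated shift in the live feature
print(psi(training, training))                   # 0.0
print(psi(training, serving) > DRIFT_THRESHOLD)  # True -> schedule retraining
```

In a pipeline, this check would run on a schedule and trigger the retraining step only when the threshold is crossed.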

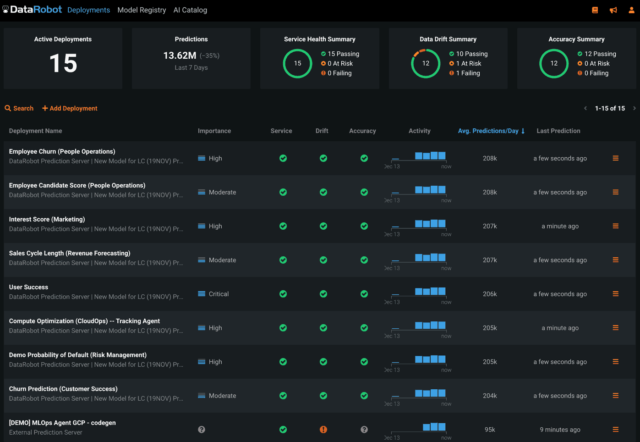

- DataRobot

Goal: An enterprise-grade AutoML platform for fast, no-code model building and deployment, with built-in explainable AI.

Key features:

- Automated feature engineering and model selection.

- Visual AI processes and explainability tools (SHAP and LIME).

- MLOps dashboard with governance and compliance features.

- Time series forecasting.

Real-world Example:

Lufthansa used DataRobot to estimate flight demand, improve routes, and cut operating costs throughout its airline network.

Best practices:

- Engage domain experts early on to verify features and explainability.

- Set thresholds for fairness and bias metrics in regulated industries.

- Use DataRobot’s champion/challenger framework to test new models against production models before replacing them.
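
A champion/challenger promotion rule with a fairness guardrail can be expressed in a few lines. This is a generic sketch, not DataRobot’s API; the `auc` and `fairness_gap` fields, the minimum gain, and the fairness limit are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ModelResult:
    name: str
    auc: float           # holdout metric (higher is better)
    fairness_gap: float  # e.g. difference in approval rate between groups


def choose_champion(champion: ModelResult, challengers: list[ModelResult],
                    min_gain: float = 0.01, max_fairness_gap: float = 0.05) -> ModelResult:
    """Promote a challenger only if it beats the champion by `min_gain`
    and stays within the fairness guardrail; otherwise keep the champion."""
    eligible = [c for c in challengers
                if c.fairness_gap <= max_fairness_gap
                and c.auc >= champion.auc + min_gain]
    return max(eligible, key=lambda c: c.auc, default=champion)


current = ModelResult("gbm_v3", auc=0.842, fairness_gap=0.03)
candidates = [
    ModelResult("gbm_v4", auc=0.861, fairness_gap=0.02),
    ModelResult("deep_v1", auc=0.874, fairness_gap=0.09),  # fails the fairness guardrail
]
print(choose_champion(current, candidates).name)  # gbm_v4
```

The most accurate model does not win here: the guardrail is applied before accuracy is compared, which is how fairness criteria stay enforceable in regulated settings.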

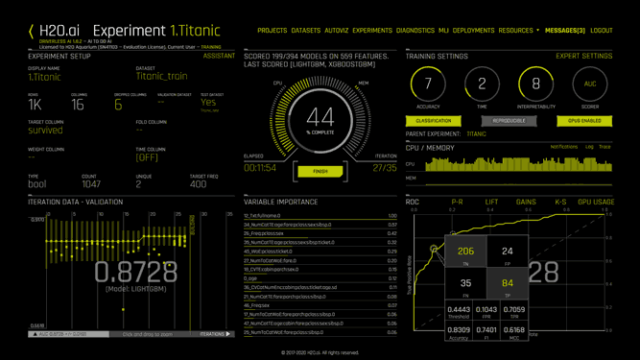

- H2O.AI (Driverless AI)

Goal: An AutoML platform known for rapid model experimentation, interpretability, and GPU acceleration.

Key features:

- Genetic algorithms for feature engineering.

- SHAP-based model explanations.

- Time series and natural language processing capabilities.

- Support for Docker and REST API deployments.

Real-world Example:

H2O.ai powers PayPal’s fraud detection algorithms, which process millions of transactions in near real time.

Best practices:

- Use interpretability reports to help with model governance.

- Use GPU acceleration to speed up pipelines on large datasets.

- Integrate H2O models with microservices using Docker.

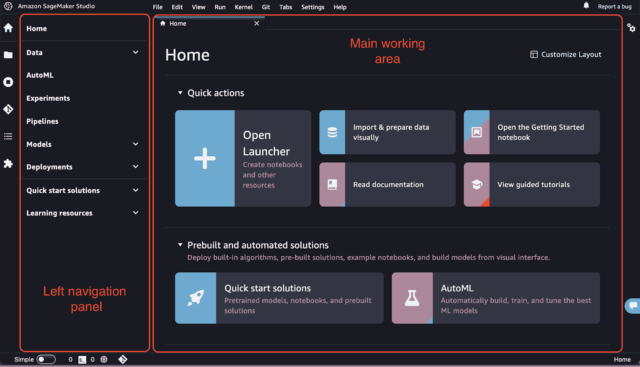

- Amazon SageMaker

Goal: A fully managed machine learning platform for building, training, and deploying models on AWS.

Key features:

- Studio IDE, pipelines, and a model monitor.

- Built-in algorithms and support for custom containers.

- Ground Truth for data labeling.

- Flexible training and hyperparameter tuning.

Real-world Example:

GE Healthcare utilizes SageMaker to create diagnostic imaging models that help radiologists discover anomalies.

Best practices:

- Use SageMaker Pipelines to automate feature engineering and preprocessing.

- Set up model monitoring for identifying data drift after deployment.

- Use IAM roles and encryption to secure your machine learning environment.

Data Management Strategy Tools Summary Table: Data Science Tools Comparison

| Tool | Best For | Unique Strength | Notable User | Cloud/Ecosystem |
|---|---|---|---|---|
| Databricks | Big data + ML at scale | Delta Lake + MLflow integration | Shell | Azure, AWS, GCP |
| Google Vertex AI | AutoML + MLOps | AutoML + BigQuery synergy | Twitter | Google Cloud |
| DataRobot | Low-code AI development | Explainable AutoML + Governance | Lufthansa | Platform-agnostic |
| H2O Driverless AI | Interpretability + speed | Genetic feature engineering | PayPal | On-prem + cloud options |
| Amazon SageMaker | Full ML lifecycle on AWS | Native AWS services integration | GE Healthcare | AWS-only |

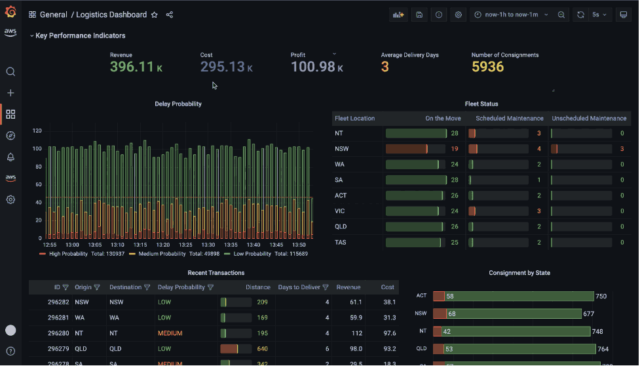

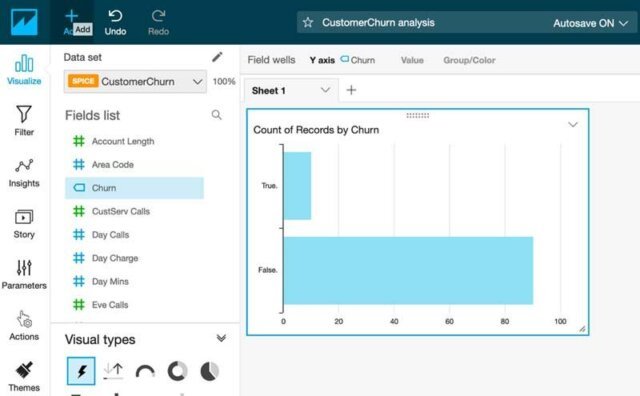

Category 12: Analytics and Business Intelligence (BI) Tools

Analytics and business intelligence (BI) tools help companies make data-driven decisions, visualize data, and uncover insights. In 2025, the emphasis is on real-time dashboards, embedded insights, augmented analytics, and natural language querying. These platforms now use AI to make analytics accessible to both data specialists and business users.

- Microsoft Power BI

Goal: A leading BI tool that offers interactive dashboards and tight integration with the Microsoft ecosystem.

Key features:

- Drag-and-drop visuals with DAX formula support.

- DirectQuery retrieves real-time data from many sources.

- AI visuals and natural-language queries.

- Tight integration with Excel, Teams, and Azure Synapse.

Real-world Illustration:

Heathrow Airport utilizes Power BI to display passenger flow, airline schedules, and resource allocation in real time, therefore increasing operational efficiency.

Best practices:

- Use the on-premises data gateway for real-time connectivity to on-premises data.

- Integrate dashboards into Microsoft Teams for collaborative insights.

- Optimize datasets with aggregations and columnstore indexes.

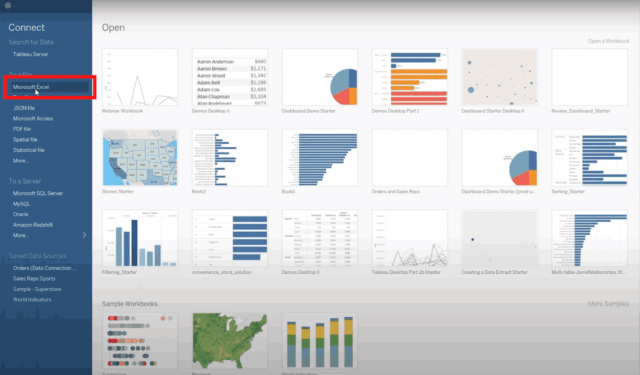

- Tableau

Goal: Prominent analytics software with intuitive visualization and storytelling capabilities.

Key features:

- A drag-and-drop dashboard builder with extensive graphing.

- Tableau Prep for data cleaning.

- Extensions and APIs for custom integrations.

- AI-powered “Ask Data” and “Explain Data” functionalities.

Real-world Illustration:

Caterpillar used Tableau to analyze IoT equipment data, spot abnormalities, and show operational KPIs across worldwide divisions.

Best practices:

- Build dashboards with actions and parameters for interactive exploration.

- Use Tableau extracts for fast performance on large datasets.

- Create calculated fields to enhance visual storytelling.

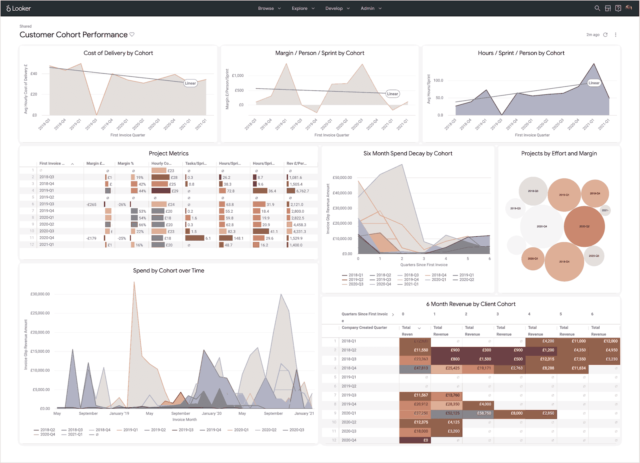

- Looker (Google Cloud)

Goal: A modern BI platform built on LookML (data modeling language), perfect for developing centralized, scalable analytics logic.

Key features:

- LookML facilitates semantic modeling.

- Integration with BigQuery, Snowflake, and Redshift.

- Embedded analytics and dashboards for web applications.

- Enterprise-grade data exploration that is governed and scalable.

Real-world Illustration:

BuzzFeed utilizes Looker to measure reader engagement, content performance, and ad effectiveness across its editorial and marketing departments.

Best practices:

- Define centralized metrics in LookML to ensure consistency.

- Use Looker Blocks to create reusable dashboards across teams.

- Implement row-level security to provide tailored data access.
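
In Looker, row-level security is typically configured in LookML (for example, with `access_filter` parameters tied to user attributes). The plain-Python sketch below only illustrates the underlying logic of deny-by-default, attribute-based row filtering; the user attributes, the `region` field, and the sample rows are hypothetical.

```python
# Hypothetical user attributes, as an identity provider might supply them.
USER_ATTRIBUTES = {
    "ana@example.com": {"region": {"EMEA"}},
    "raj@example.com": {"region": {"APAC", "EMEA"}},
    "cfo@example.com": {"region": None},  # None = explicit access to all regions
}

ROWS = [
    {"region": "EMEA", "revenue": 120},
    {"region": "APAC", "revenue": 90},
    {"region": "AMER", "revenue": 200},
]


def visible_rows(user: str, rows: list[dict], field: str = "region") -> list[dict]:
    """Filter rows by the user's attribute for `field`; deny by default."""
    attrs = USER_ATTRIBUTES.get(user, {})
    if field not in attrs:
        return []  # unknown user or no grant: see nothing
    allowed = attrs[field]
    if allowed is None:
        return list(rows)  # explicit grant to every value
    return [r for r in rows if r[field] in allowed]


print(sum(r["revenue"] for r in visible_rows("raj@example.com", ROWS)))  # 210
print(len(visible_rows("cfo@example.com", ROWS)))                        # 3
print(visible_rows("intruder@example.com", ROWS))                        # []
```

Defining the filter once in the semantic layer, rather than in each dashboard, is what keeps every report consistent with the same access rules.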

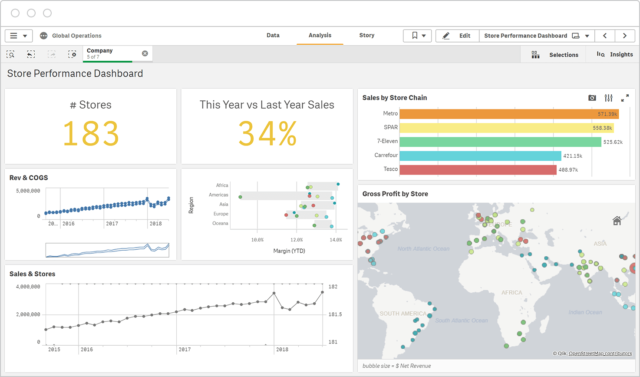

- Qlik Sense

Goal: An associative BI platform that provides real-time analytics and AI-based insights.

Key features:

- The associative engine facilitates intuitive drilldowns.

- Insight Advisor offers augmented analytics.

- Open APIs provide embedded analytics.

- Intelligent search and visual storytelling capabilities.

Real-world Illustration:

Siemens used Qlik Sense to monitor production performance and machine health in plants across continents.

Best practices:

- Use Insight Advisor to get guided analysis ideas.

- Use Qlik APIs to create mashups for bespoke UI dashboards.

- Use Qlik’s associative model to investigate “unknown unknowns.”

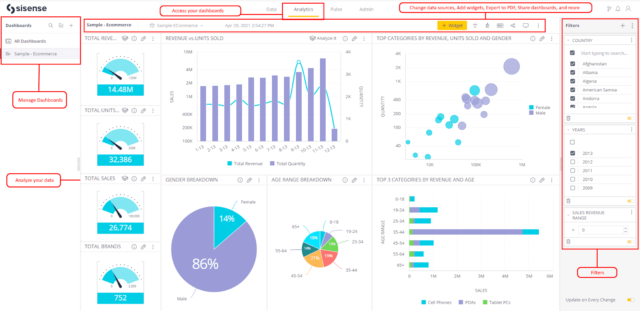

- Sisense

Goal: A robust BI and analytics platform with embedded analytics and developer-friendly features.

Key features:

- Full-stack business intelligence using the ElastiCube in-memory engine.

- Embedded analytics for SaaS products and portals.

- Dashboards now natively support AI/ML models.

- REST APIs and the JavaScript SDK provide extensibility.

Real-world Illustration:

Air Canada uses Sisense to embed flight data analytics in internal apps, helping improve turnaround times and passenger service metrics.

Best practices:

- For creating custom visualization widgets, use Sisense Blox.

- Integrate dashboards with operational tools to gain contextual information.

- Use Sisense Pulse to create alerts for threshold violations.

Data Management Strategy Tools Summary Table: Analytics and BI Tools Comparison

| Tool | Best For | Unique Strength | Notable User | Deployment Type |
|---|---|---|---|---|
| Power BI | Microsoft ecosystem + real-time data | NLU queries + Teams/Excel integration | Heathrow | Desktop, Cloud, Hybrid |
| Tableau | Visual exploration & dashboards | Explain Data + strong storytelling | Caterpillar | On-prem, Cloud |
| Looker | Semantic models + embedded analytics | LookML for metric consistency | BuzzFeed | Cloud-first (GCP) |
| Qlik Sense | Associative analytics + smart search | In-memory associative engine | Siemens | Cloud, On-prem |
| Sisense | Embedded analytics + AI/ML in UI | Developer-first BI stack | Air Canada | Cloud, Hybrid |

Category 13: Cloud Data Tools

Cloud data platforms and warehouses form the foundation of modern data management. In 2025, these systems focus on scalability, real-time analytics, cross-cloud interoperability, and unified governance. They provide massive storage, fast querying, and frictionless data sharing, all of which are critical for enterprises that manage diverse, distributed data.

- Microsoft Azure Synapse Analytics

Goal: A unified analytics service that combines big data processing, SQL data warehousing, and Spark for real-time analysis.

Key features:

- Serverless and dedicated SQL pools.

- Built-in Spark engine and pipeline orchestration.

- Deep integration with Power BI and Microsoft Purview.

- Strong security (AAD, RBAC, and dynamic masking).

Real-world Illustration:

Marks & Spencer employs Synapse Analytics to combine data from e-commerce, POS, and supply chain systems to gain omnichannel insights.

Best practices:

- Optimize costs by combining serverless and dedicated SQL pools.

- Integrate with Microsoft Purview to manage data cataloguing and lineage.

Data Management Strategy Tools Summary Table: Cloud Data Tools Comparison

| Platform | Best For | Key Differentiator | Notable User | Deployment Type |
|---|---|---|---|---|
| Snowflake | Multi-cloud, scalable warehousing | Seamless cross-org data sharing | PepsiCo | Cloud (AWS, GCP, Azure) |
| Google BigQuery | Real-time, serverless analytics | Built-in ML and integration with GCP stack | Spotify | Cloud (Google Cloud) |
| Amazon Redshift | Scalable SQL + data lake analytics | AQUA + tight AWS integration | McDonald’s | Cloud (AWS) |
| Azure Synapse Analytics | Unified analytics on Microsoft stack | SQL + Spark + BI integration | Marks & Spencer | Cloud (Azure) |
| Databricks Lakehouse | Unified lakehouse for BI + ML | Delta Lake + MLflow + Lakehouse Federation | HSBC | Cloud, Hybrid |

Category 14: Master Data Management Tools

In 2025, Master Data Management (MDM) platforms feature real-time synchronization, multi-domain governance, AI-driven data matching, and cloud-native scalability.

- SAP Master Data Governance (MDG)

Goal: An enterprise-grade MDM platform integrated with the SAP ecosystem to manage master data across SAP and non-SAP applications.

Key features:

- Finance, material, consumer, and vendor data are all governed centrally.

- Integration with SAP S/4HANA, ECC, and Ariba.

- Change request and approval workflows.

- Data replication and validation logic.

Real-world Illustration:

Unilever uses SAP MDG to manage its worldwide product catalog and optimize data integration across procurement and logistics divisions.

Best practices:

- Define central versus federated governance frameworks based on data domain.

- Automate change request procedures with SAP Business Workflow.

- Use MDG analytics to track master data KPIs and SLAs.
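
A change request workflow is, at its core, a state machine with an audit trail. The sketch below is a hypothetical two-step approval flow in plain Python, not SAP MDG’s data model or API; the status names and allowed transitions are assumptions. Recording every transition provides the raw data for tracking master data KPIs and SLAs.

```python
from enum import Enum


class Status(Enum):
    DRAFT = "draft"
    SUBMITTED = "submitted"
    APPROVED = "approved"
    REJECTED = "rejected"
    ACTIVATED = "activated"


# Allowed transitions for a hypothetical two-step approval process.
TRANSITIONS = {
    Status.DRAFT: {Status.SUBMITTED},
    Status.SUBMITTED: {Status.APPROVED, Status.REJECTED},
    Status.REJECTED: {Status.DRAFT},      # requester may rework and resubmit
    Status.APPROVED: {Status.ACTIVATED},  # changes go live only after approval
    Status.ACTIVATED: set(),
}


class ChangeRequest:
    def __init__(self, object_id: str, changes: dict):
        self.object_id = object_id
        self.changes = changes
        self.status = Status.DRAFT
        self.audit = [Status.DRAFT]  # every state the request has passed through

    def move_to(self, new_status: Status) -> None:
        """Apply a transition, rejecting any the workflow does not allow."""
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(f"{self.status.value} -> {new_status.value} not allowed")
        self.status = new_status
        self.audit.append(new_status)


cr = ChangeRequest("MAT-1001", {"base_unit": "KG"})
for step in (Status.SUBMITTED, Status.APPROVED, Status.ACTIVATED):
    cr.move_to(step)
print([s.value for s in cr.audit])  # ['draft', 'submitted', 'approved', 'activated']
```

Because an unapproved request cannot reach `ACTIVATED`, the governance rule is enforced by the workflow itself rather than by convention.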

- IBM InfoSphere MDM

Goal: An enterprise-grade MDM solution for operational and analytical use cases, with hybrid and on-premises deployment options.

Key features:

- Probabilistic and deterministic matching algorithms.

- Real-time data access and virtual MDM capabilities.

- Workflow-driven data stewardship.

- Integration with the Watson Knowledge Catalog and IBM Cloud Pak.

Real-world Illustration:

TD Bank utilizes IBM InfoSphere MDM to ensure accurate client profiles throughout its retail and investment banking businesses, resulting in consistent customer experiences.

Best practices:

- Use InfoSphere’s suspect processing to handle doubtful matches.

- Create stewardship dashboards to monitor open tasks and data abnormalities.

- Integrate IBM DataStage for ETL.
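
Suspect processing usually comes down to two score thresholds: pairs above the upper one are linked automatically, pairs below the lower one are treated as distinct, and everything in between goes to a data steward. The sketch below illustrates that routing in plain Python; it is not InfoSphere’s API, and the 0.90/0.70 thresholds, record IDs, and scores are hypothetical.

```python
def classify_pair(score: float, autolink: float = 0.90, clerical: float = 0.70) -> str:
    """Route a candidate duplicate pair by its match score."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= autolink:
        return "auto-link"  # confident match: merge automatically
    if score >= clerical:
        return "suspect"    # uncertain: queue for a data steward
    return "distinct"       # confident non-match


pairs = {("C-17", "C-204"): 0.96, ("C-17", "C-388"): 0.78, ("C-52", "C-90"): 0.41}
steward_queue = [pair for pair, s in pairs.items() if classify_pair(s) == "suspect"]
print(steward_queue)  # [('C-17', 'C-388')]
```

Widening the gap between the two thresholds sends more pairs to stewards but lowers the risk of wrong automatic merges, a trade-off that regulated industries often tune conservatively.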

- Stibo Systems STEP

Goal: A multi-domain MDM platform for managing product, supplier, and customer data.

Key features:

- Strong product information management (PIM) capabilities.

- Workflow orchestration for enterprise users.

- Ready-made APIs and connectors for integration.

- Configurable data modeling and taxonomy management.

Real-world Illustration:

Adidas uses Stibo STEP to integrate and maintain product and supplier data across many global markets, supporting omnichannel consistency and faster time to market.

Best practices:

- Create data hierarchies for each domain.

- Automate the onboarding of supplier/product records using validation criteria.

- Use built-in audit trails to ensure regulatory compliance.

Data Management Strategy Tools Summary Table: Master Data Management Tools Comparison

| Tool | Best For | Key Differentiator | Notable User | Deployment Type |
|---|---|---|---|---|
| Informatica MDM | Multi-domain, AI-powered MDM | ML-driven golden record creation | GE Healthcare | Cloud, On-prem, Hybrid |
| SAP MDG | SAP-centric enterprises | Tight integration with SAP ERP | Unilever | On-prem, Cloud (SAP BTP) |
| Reltio | Cloud-native, API-first architecture | Real-time unification + graph analytics | Pfizer | SaaS, Cloud-native |
| IBM InfoSphere MDM | Hybrid enterprise needs | Probabilistic matching + stewardship tools | TD Bank | On-prem, Hybrid |
| Stibo Systems STEP | Product-focused MDM | Strong PIM + taxonomy management | Adidas | Cloud, On-prem |

Category 15: Machine Learning and Data Science Tools

Data science and machine learning (ML) technologies are critical for turning raw data into predictive insights. In 2025, top platforms provide seamless integration with modern data stacks, end-to-end MLOps, scalable model training, and automated feature engineering, allowing organizations to operationalise AI faster and more reliably.

- Google Vertex AI

Goal: A managed MLOps platform that unifies model development, training, and deployment.

Key features:

- AutoML and custom model training using scalable infrastructure.

- Prebuilt pipelines and a managed feature store.

- Vertex Explainable AI promotes transparency.

- Integration with BigQuery and Looker.

Real-world Illustration:

Wayfair employs Vertex AI to personalize suggestions based on consumer activity data, resulting in considerably higher click-through and conversion rates.

Best practices:

- Use managed datasets for versioning and reproducibility.

- If you have limited data science resources, use AutoML to train models on tabular and image data.

- AWS SageMaker

Goal: A powerful machine learning platform with capabilities for every stage of the ML lifecycle.

Key features:

- Built-in algorithms, model tuning, and training infrastructure.

- SageMaker Studio for development and visualization.

- Model Monitor and Clarify identify bias and drift.

- Data Wrangler for feature engineering.

Real-world Illustration:

Intuit employs SageMaker to power real-time fraud detection models for the TurboTax and QuickBooks platforms.

Best practices:

- SageMaker Pipelines are ideal for repeatable model deployment.

- SageMaker Model Monitor allows you to track the status of deployed models.

- Make use of the built-in notebooks to swiftly prototype.

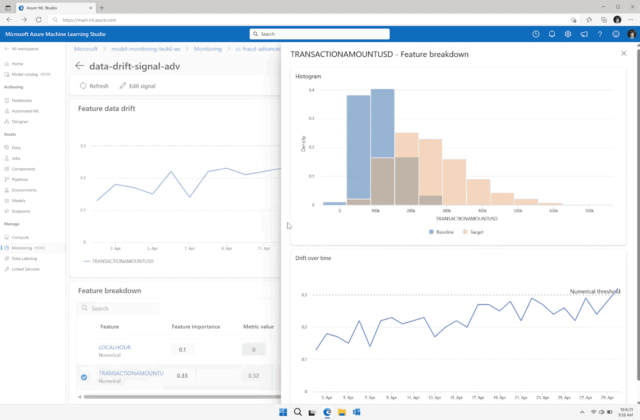

- Microsoft Azure Machine Learning

Goal: An enterprise-grade MLOps platform that integrates with the Azure ecosystem to support AI development.

Key features:

- A drag-and-drop designer for no-code workflows.

- Integrated Responsible AI tools (bias, fairness, and explainability).

- MLflow integration and support for Azure DevOps.

- Compute clusters for scalable training.

Real-world Illustration:

Rolls-Royce uses Azure ML to build predictive maintenance models from jet engine telemetry data, helping reduce flight delays and downtime.

Best practices:

- Register datasets and models to provide complete traceability.

- Use the Responsible AI dashboard prior to deployment.

- Integrate Azure DevOps to automate CI/CD of machine learning models.

- DataRobot

Goal: An automated machine learning (AutoML) and MLOps platform that enables businesses to deploy accurate models quickly.

Key features:

- Single-click model training and benchmarking.

- Time series forecasting and anomaly detection tools.

- Built-in bias and compliance tracking.

- Business-friendly visualizations and what-if scenarios.

Real-world Illustration:

Lenovo used DataRobot to estimate supply chain demand, dynamically modifying inventory and logistics to reduce costs and improve efficiency.

Best practices:

- Use the Leaderboard to compare models and select the best-performing algorithm.

- Use guardrails to avoid overfitting and promote fairness.

- Schedule model retraining to counter concept drift in time-sensitive domains.

Data Management Strategy Tools Summary Table: Machine Learning and Data Science Tools Comparison

| Platform | Best For | Key Differentiator | Notable User | Deployment Type |
|---|---|---|---|---|
| Databricks | End-to-end lakehouse ML workflows | MLflow + Spark + Delta Lake | Shell | Cloud, Hybrid |
| Google Vertex AI | Scalable MLOps with GCP integration | AutoML + Explainable AI + BigQuery synergy | Wayfair | Cloud (Google Cloud) |
| AWS SageMaker | Full ML lifecycle with managed services | Studio, Model Monitor, and Data Wrangler | Intuit | Cloud (AWS) |
| Azure Machine Learning | Enterprise AI with governance tools | Responsible AI + Azure integration | Rolls-Royce | Cloud (Azure) |
| DataRobot | Fast, accessible AutoML for business | Time series, visual AI, and compliance guardrails | Lenovo | SaaS, Cloud, On-prem |

Bringing It All Together: The Power of an Integrated Data Tool Stack

As we’ve seen across these 15 critical categories, developing a contemporary data management strategy in 2025 requires more than selecting one or two platforms; it means orchestrating a linked ecosystem of best-in-class solutions. Each tool serves a specific role: integrating and storing data from multiple sources at scale, governing usage, assuring quality, modelling relationships, enabling insights, or powering AI-driven decisions.

However, the real benefit does not come from picking the “top tools” in isolation. The competitive advantage comes from how well these technologies work together, with clear integration points, shared governance norms, centralized metadata, and consistent data quality standards. This alignment enables faster innovation, scalable compliance, and consistent decision-making across departments.

With the appropriate balance of these 15 categories, you can create a data-driven culture that is flexible, intelligent, and long-lasting.

Data Management Strategy Tool Alignment with Business Strategy

Aligning data management with business strategy is one of the most neglected aspects of data management. The tools you select should support key initiatives such as:

| Business Goal | Data Tool Capability Needed |
| --- | --- |
| Entering new markets | Multilingual metadata, scalable cloud warehousing |
| Improving customer experience | Real-time analytics, 360-degree customer view |
| Cost reduction | Data quality automation, storage optimization |
| Innovation (AI/ML readiness) | Centralized, accessible, and well-governed datasets |

Example:

A logistics business seeking to optimize delivery routes can combine streaming data integration (Apache Kafka) with real-time analytics (Databricks or BigQuery) to forecast conditions and reroute deliveries based on traffic and weather.
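To make the streaming pattern concrete, here is a minimal sketch that assumes events have already been consumed from a topic; no Kafka client is used, and a plain list stands in for the stream. The `RouteMonitor` class, route names, window size, and 15-minute threshold are all hypothetical. Observed delay serves as a simple proxy for traffic and weather conditions; a production system would feed such signals into a forecasting model rather than a rolling average.

```python
from collections import defaultdict, deque


class RouteMonitor:
    """Rolling per-route delay average over the last `window` events (hypothetical logic)."""

    def __init__(self, window: int = 3, max_avg_delay_min: float = 15.0):
        self.window = window
        self.max_avg_delay_min = max_avg_delay_min
        # One bounded queue per route; old events fall off automatically.
        self.delays = defaultdict(lambda: deque(maxlen=window))

    def observe(self, route: str, delay_min: float) -> bool:
        """Record one event and return True when the route should be rerouted."""
        recent = self.delays[route]
        recent.append(delay_min)
        # Only decide once the window is full, to avoid reacting to a single spike.
        if len(recent) < self.window:
            return False
        return sum(recent) / len(recent) > self.max_avg_delay_min


# A list of (route, delay in minutes) events stands in for messages read from a topic.
events = [("R1", 5), ("R2", 20), ("R1", 7), ("R2", 25), ("R1", 6), ("R2", 18)]
monitor = RouteMonitor()
flagged = [route for route, delay in events if monitor.observe(route, delay)]
print(flagged)  # ['R2']
```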

Cloud vs. On-Premise vs. Hybrid Tools: Flexibility in Deployment

Performance, cost, and compliance all depend on selecting the appropriate deployment model:

| Deployment Model | Best For | Example Tools |
| --- | --- | --- |
| Cloud | Scalability, modern apps, lower CAPEX | Snowflake, BigQuery, Talend Cloud |
| On-Premise | Legacy systems, data sovereignty | Informatica PowerCenter, Oracle |
| Hybrid | Gradual cloud adoption, flexible compliance | Microsoft Azure Synapse, IBM Cloud Pak |

Tip:

A hybrid configuration can ease migration while keeping mission-critical workloads on premises.

KPIs (Key Performance Indicators) for Assessing the Effectiveness of Data Management Strategy Tools

Track these KPIs to demonstrate ROI and gauge how effective each tool is:

| KPI | Why It Matters |
| --- | --- |
| Data Accuracy Rate (%) | Ensures trustworthy analysis |
| Time-to-Insight (TTI) | Measures analytics agility |
| Data Issue Resolution Time | Tracks responsiveness to data quality problems |
| Data Access Requests Fulfilled (%) | Indicates accessibility and democratization |
| Compliance Audit Pass Rate | Demonstrates governance maturity |

Example:

A 30% reduction in Time-to-Insight after implementing Tableau with Snowflake would be a quantifiable success indicator.
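The KPIs in the table reduce to simple arithmetic once the underlying counts and timings are collected. The sketch below uses hypothetical monthly figures, chosen so that Time-to-Insight drops from 10 days to 7 days, matching the 30% reduction in the example. How each input is measured (what counts as a "valid" record, when an insight counts as "delivered") is an organizational definition this sketch assumes rather than prescribes.

```python
from datetime import timedelta


def pct(part: int, whole: int) -> float:
    """Percentage helper; returns 0.0 when there is nothing to measure."""
    return 100.0 * part / whole if whole else 0.0


def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after` (negative means a reduction)."""
    return 100.0 * (after - before) / before


# Hypothetical monthly figures for illustration only.
records_checked, records_valid = 20_000, 19_400
access_requests, access_fulfilled = 120, 102
resolution_hours = [4, 12, 2, 6]   # time to resolve each data issue
tti_before = timedelta(days=10)    # time-to-insight before the new tooling
tti_after = timedelta(days=7)      # time-to-insight after

accuracy_rate = pct(records_valid, records_checked)                                  # 97.0
fulfilled_rate = pct(access_fulfilled, access_requests)                              # 85.0
mean_resolution_h = sum(resolution_hours) / len(resolution_hours)                    # 6.0
tti_change = percent_change(tti_before.total_seconds(), tti_after.total_seconds())  # -30.0
```

Keeping the raw counts alongside the derived percentages makes it easy to audit a KPI later, for example when a compliance review asks how an accuracy rate was computed.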

Typical Integration Patterns for Data Management Strategy Tools

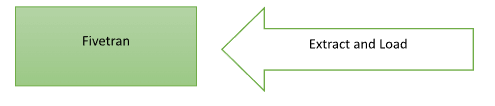

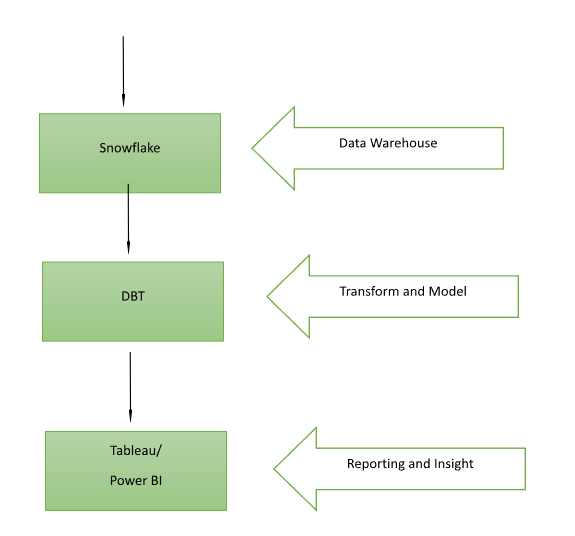

The diagrams above show how different data management strategy tools combine into a cohesive data management strategy: each tool performs a specific function while linking to and cooperating with the others.

A New Development in Data Management Strategy Tool Orchestration: DataOps

Traditional data management techniques often fall short in today’s fast-paced, data-driven environment. DataOps is an emerging approach that improves data operations by introducing automation, agility, and continuous delivery, much as DevOps did for software development.

DataOps applies Agile, Lean, and DevOps principles to make data pipelines repeatable, dependable, and adaptable to change, rather than treating them as static, one-time projects.

Typical DataOps Stack Tools

| Function | Tool Examples |
| --- | --- |
| Orchestration | Apache Airflow, Prefect, Dagster |
| Transformation (T in ELT) | dbt (Data Build Tool), Spark, SQLMesh |
| Version Control | Git, GitLab, Bitbucket |
| Testing & Validation | Great Expectations, Soda, Deequ |
| Monitoring & Observability | Monte Carlo, Databand, OpenLineage |
| CI/CD for Data Pipelines | Jenkins, CircleCI, GitHub Actions |
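Orchestrators such as Apache Airflow, Prefect, and Dagster all model a pipeline as a directed acyclic graph (DAG) of tasks. The sketch below illustrates that idea with Python's standard-library `graphlib` module rather than any orchestrator's API: it groups tasks into batches that could run in parallel and rejects pipelines with circular dependencies. The task names are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract_crm": set(),
    "extract_erp": set(),
    "transform": {"extract_crm", "extract_erp"},
    "quality_tests": {"transform"},
    "load_warehouse": {"quality_tests"},
}


def plan_batches(dag: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into batches; tasks in the same batch have no mutual dependencies."""
    sorter = TopologicalSorter(dag)
    try:
        sorter.prepare()
    except CycleError as exc:
        # args[1] holds the nodes that form the cycle.
        raise ValueError(f"pipeline contains a cycle: {exc.args[1]}") from exc
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # every dependency of these tasks is done
        batches.append(ready)
        # A real orchestrator would run (and retry) the batch, then mark only successes done.
        sorter.done(*ready)
    return batches


print(plan_batches(pipeline))
# [['extract_crm', 'extract_erp'], ['transform'], ['quality_tests'], ['load_warehouse']]
```

Placing `quality_tests` between `transform` and `load_warehouse` is the DataOps habit in miniature: bad data is stopped before it reaches the warehouse rather than discovered in a dashboard.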

The Role of DataOps in Your Data Management Plan

| Strategy Component | How DataOps Enhances It |
| --- | --- |
| Data Integration | Automates ETL/ELT pipelines with reusable code and templates |
| Data Quality | Ensures checks and balances through embedded test suites |
| Data Governance | Offers audit logs and lineage visibility across workflows |
| Analytics | Speeds up deployment of trusted, versioned models |
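The "embedded test suites" idea can be sketched in plain Python. Great Expectations, Soda, and Deequ each have their own APIs and check syntax; the functions below (`not_null`, `unique`, `in_range`, `run_suite`) are hypothetical helpers that only illustrate the pattern of declaring checks once and running them on every batch before it is promoted downstream.

```python
from typing import Callable

Row = dict
Check = Callable[[list[Row]], list[str]]


def not_null(column: str) -> Check:
    def check(rows):
        bad = [i for i, r in enumerate(rows) if r.get(column) is None]
        return [f"{column}: null in rows {bad}"] if bad else []
    return check


def unique(column: str) -> Check:
    def check(rows):
        seen, dupes = set(), set()
        for r in rows:
            value = r.get(column)
            if value in seen:
                dupes.add(value)
            seen.add(value)
        return [f"{column}: duplicate values {sorted(dupes)}"] if dupes else []
    return check


def in_range(column: str, lo: float, hi: float) -> Check:
    def check(rows):
        # Nulls are left to not_null(); only present values are range-checked.
        bad = [r[column] for r in rows if r.get(column) is not None and not lo <= r[column] <= hi]
        return [f"{column}: out of range {bad}"] if bad else []
    return check


def run_suite(rows: list[Row], checks: list[Check]) -> list[str]:
    """Run every check; an empty result means the batch may be promoted downstream."""
    failures = []
    for check in checks:
        failures.extend(check(rows))
    return failures


orders = [
    {"order_id": 1, "amount": 42.5},
    {"order_id": 2, "amount": None},
    {"order_id": 2, "amount": -3.0},
]
suite = [not_null("amount"), unique("order_id"), in_range("amount", 0, 10_000)]
for failure in run_suite(orders, suite):
    print(failure)
```

Because the suite is ordinary code, it can live in version control next to the transformations it protects and run in the same CI/CD pipeline.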

Real-World Flow Example:

Source Systems → Fivetran → Snowflake

Best Practices for Using Data Management Strategy Tools

- Establish Specific Goals

Align tools with specific business objectives, such as improving compliance or reducing attrition.

- Begin Small, Grow Quickly

Start with the areas that will have the most impact (such as customer data), then expand across departments.

- Engage Stakeholders Early

Involve the business, IT, and compliance teams early in the tool selection and design phase.

- Automate Wherever Possible

Automate metadata updates, data quality checks, and ETL.

- Audit and Optimize Regularly

Track tool performance and data utilization, and make frequent process improvements.

Real-World Example: Mid-Sized Enterprise’s Data Management Strategy Stack

| Tool | Purpose |
| --- | --- |
| Fivetran | Data integration from SaaS apps |
| Snowflake | Cloud data warehouse |
| Collibra | Governance, glossary, policy control |
| Informatica DQ | Data profiling and cleansing |
| Power BI | Business intelligence and dashboards |

This stack delivers end-to-end data usage, visibility, and trust across operations, finance, and marketing.

Criteria for Tool Selection: Going Beyond Buzzwords

Consider more than just features when selecting a data management strategy tool:

| Criteria | Questions to Ask |
| --- | --- |
| Scalability | Can it grow with your data volume and user base? |
| Interoperability | Does it connect with your existing tech stack? |
| Governance Fit | Does it support your policy, lineage, and audit needs? |
| User Experience | Is it friendly for both technical and business users? |
| Vendor Support & Roadmap | Are updates frequent, and is support responsive? |
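One common way to apply these criteria is a weighted scoring matrix. In the sketch below, the weights, the 1 to 5 rating scale, and the two candidates ("Tool A", "Tool B") are hypothetical placeholders, not assessments of real products; each organization would set the weights from its own priorities.

```python
# Hypothetical weights (summing to 1.0) reflecting one organization's priorities.
WEIGHTS = {
    "scalability": 0.25,
    "interoperability": 0.25,
    "governance_fit": 0.20,
    "user_experience": 0.15,
    "vendor_support": 0.15,
}


def weighted_score(ratings: dict[str, float]) -> float:
    """Combine 1-5 ratings per criterion into a single weighted score."""
    missing = WEIGHTS.keys() - ratings.keys()
    if missing:
        # Forcing a rating for every criterion stops a tool from winning by omission.
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return round(sum(WEIGHTS[c] * ratings[c] for c in WEIGHTS), 2)


# Illustrative ratings for two anonymous candidates.
candidates = {
    "Tool A": {"scalability": 5, "interoperability": 3, "governance_fit": 4,
               "user_experience": 4, "vendor_support": 3},
    "Tool B": {"scalability": 4, "interoperability": 5, "governance_fit": 4,
               "user_experience": 3, "vendor_support": 4},
}
ranking = sorted(candidates, key=lambda name: weighted_score(candidates[name]), reverse=True)
print(ranking)  # ['Tool B', 'Tool A']
```

Here Tool A rates higher on scalability, but Tool B's stronger interoperability and vendor support outweigh it under these particular weights; changing the weights can change the winner, which is exactly the conversation the matrix is meant to prompt.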

Bonus: Resources to Expand Your Understanding

For readers who want to learn more, these industry resources are good starting points:

- DAMA-DMBOK Framework: the foundational framework for corporate data management

- Gartner Magic Quadrant: category-based tool evaluation

- MIT CDOIQ Symposium: an international gathering on data strategy and leadership

- The DataOps Manifesto: principles for agile data operations

AI-Powered Data Management Tools: The Future

Artificial intelligence and machine learning are increasingly integrated into modern systems to improve data management:

| Capability | How AI Helps | Tool Example |
| --- | --- | --- |
| Anomaly Detection | Flags irregular patterns in real-time | IBM Watson, DataRobot, BigID |
| Metadata Tagging | Auto-classifies datasets using NLP | Atlan, Alation, Informatica CLAIRE |
| Data Cleansing | Suggests corrections or matches using ML | Trifacta, Talend Data Prep |
| Predictive Lineage | Maps out undocumented transformations automatically | Manta, Octopai |

Pro Tip: Favor tools with natively integrated AI/ML modules over bolt-on add-ons; native integration typically makes automation smoother and insights faster.
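The anomaly-detection and data-cleansing capabilities in the table rest on ideas that can be shown with simple statistics. This sketch swaps in a z-score rule for ML-based anomaly detection and `difflib` string similarity for ML-based matching; it is not how IBM Watson, Trifacta, Talend, or any other listed product works internally. The thresholds and sample values are assumptions. With only ten observations, a single outlier inflates the standard deviation enough that its z-score cannot exceed 3, so the example uses a threshold of 2.5.

```python
import difflib
import statistics


def zscore_anomalies(values: list[float], threshold: float = 3.0) -> list[int]:
    """Return indices whose value lies more than `threshold` standard deviations from the mean."""
    mean = statistics.fmean(values)
    stdev = statistics.pstdev(values)
    if stdev == 0:
        return []  # constant series: nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mean) / stdev > threshold]


def suggest_corrections(raw: list[str], reference: list[str], cutoff: float = 0.8) -> dict[str, str]:
    """Map each non-canonical value to its closest reference value, if one is similar enough."""
    suggestions = {}
    for value in raw:
        if value in reference:
            continue  # already canonical
        match = difflib.get_close_matches(value, reference, n=1, cutoff=cutoff)
        if match:
            suggestions[value] = match[0]
    return suggestions


daily_row_counts = [1000, 1010, 990, 1005, 995, 1002, 998, 1003, 997, 40]
print(zscore_anomalies(daily_row_counts, threshold=2.5))  # [9]
print(suggest_corrections(["Germnay", "France", "Untied Kingdom", "Atlantis"],
                          ["Germany", "France", "United Kingdom"]))
# {'Germnay': 'Germany', 'Untied Kingdom': 'United Kingdom'}
```

Values with no close match (here "Atlantis") are deliberately left out of the suggestions, so a human or a stricter rule can decide what to do with them rather than forcing a wrong correction.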

The Top Techniques for Developing a Successful Data Management Stack

Keep these key practices in mind:

| Area | Best Practice |
| --- | --- |
| Tool Selection | Choose tools based on interoperability, scalability, and future-proofing. |
| Data Governance | Establish clear ownership, policies, and roles for every data domain. |
| Cloud Strategy | Prioritize hybrid or multi-cloud compatibility for agility. |
| Integration | Automate data movement and transformation pipelines as much as possible. |
| MLOps & BI Alignment | Ensure analytics and ML platforms are tightly connected to data governance. |
| Skills Development | Invest in upskilling teams across engineering, science, and business units. |
| Continuous Monitoring | Track data quality, performance, and drift continuously across the pipeline. |

What to Do Next

Now is the moment to take action. Begin with a gap analysis of your existing data infrastructure: determine which of these 15 categories are strong, where you are underinvested, and where your staff experience operational friction. Use this knowledge to prioritize future technology investments in tools that integrate smoothly, scale easily, and align with your company objectives.

Remember that technology alone does not bring transformation. Success depends on how well these tools support your people, processes, and governance procedures. A clear data management strategy, supported by the appropriate technologies, enables faster innovation, better customer experiences, and more informed decisions throughout the company.

The tools are available, and the path is clear. Whether you’re improving current systems or building your data stack from scratch, the moment to act is now. Data-driven success begins not with more data, but with smarter tools and strategies.

A strong data strategy doesn’t stop with tool purchases. It begins with a vision and is sustained through operations, culture, and cross-team collaboration. Use this guide to:

- Examine your existing data stack.

- Determine any gaps in the 15 categories.

- Set investment priorities according to your company’s objectives.

- Make a plan for data management that is safe, scalable, and insight-driven.

The future will be shaped by the companies that use data to make decisions and insights to take action. That future may be yours if you use the right data management strategy tools and tactics in 2025.

Conclusion: Using the Proper Tools to Coordinate the Future of Data

A data management strategy tool is a business enabler as well as an IT solution.

In 2025, data is not simply an asset; it is the currency of digital transformation. Successful organizations govern, secure, and leverage data through a purposeful, well-designed data management strategy supported by the appropriate technologies throughout the data lifecycle.

We have examined 15 key tool categories that together make up the contemporary data stack, ranging from the ingestion pipelines of Fivetran and the cloud warehousing power of Snowflake to the production of golden records in Informatica MDM and the predictive modelling in Databricks. Each has a distinct, vital role in facilitating:

- Real-time insights derived from dynamic data streams

- Regulatory compliance in increasingly complex environments

- AI readiness through scalable, governed machine learning processes

- Cross-functional collaboration among business teams, data scientists, analysts, and data engineers

Top-performing companies in 2025 will be distinguished not just by the technologies they employ but also by how effectively those tools integrate with one another, how strategically they are managed, and how data-literate the company has become.